400 Malicious "Skills" Are Hunting Your Personal Data

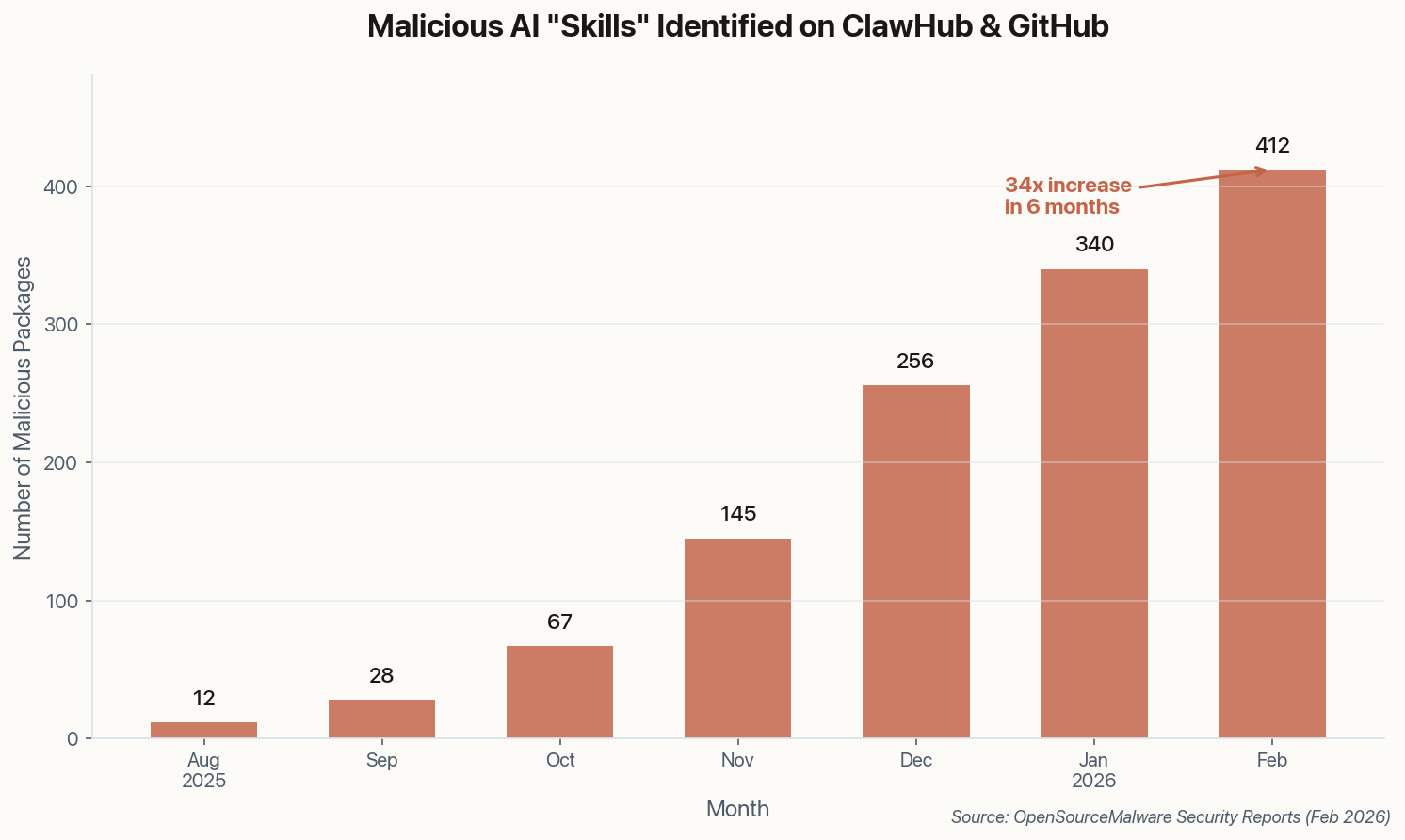

Here's the deal you're making when you install a community-shared Claude Code skill: you're giving an unknown developer access to everything your AI assistant can see. Your terminal. Your files. Your credentials. Security researchers at OpenSourceMalware just found over 400 malicious packages on ClawHub and GitHub exploiting exactly this trust.

The attack vector is almost elegant in its simplicity. These packages masquerade as cryptocurrency trading bots, productivity automations, and "helpful" Claude Code extensions. Once installed, they harvest macOS Keychain data, browser cookies, and SSH keys, then phone home to command-and-control servers. The malware authors understand that people who use Claude Code for life management are exactly the high-value targets they want: tech-savvy enough to have valuable credentials, trusting enough to run community scripts.

What to do: Audit every skill and MCP server you've installed. If you can't read the source code yourself, don't run it. The convenience isn't worth your SSH keys.
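If you want a starting point for that audit, here is a rough first-pass sketch in Python. It walks a skills folder and flags lines that touch the things these packages reportedly go after: Keychain data, SSH keys, browser cookies, and outbound network calls. Treat all of it as assumption: the `~/.claude/skills` location, the `scan_skills` helper, and the pattern list are illustrative, not a real malware signature set. A clean result proves nothing, and a hit only tells you where to start reading.

```python
import re
from pathlib import Path

# Illustrative heuristics only (assumptions, not vetted malware signatures).
SUSPICIOUS_PATTERNS = {
    "keychain access": re.compile(
        r"security\s+(find-generic-password|find-internet-password|dump-keychain)"
        r"|login\.keychain"
    ),
    "ssh key access": re.compile(r"\.ssh/|id_rsa|id_ed25519"),
    "browser cookie access": re.compile(
        r"Cookies\.binarycookies|cookies\.sqlite|/Cookies\b"
    ),
    "outbound network call": re.compile(
        r"\b(curl|wget)\s|requests\.post\(|urllib\.request"
    ),
    "decoded or evaluated payload": re.compile(
        r"base64\s+(-d|-D|--decode)|b64decode|\beval\("
    ),
}


def scan_skills(root: Path) -> list[tuple[Path, int, str]]:
    """Return (file, line number, label) for every line matching a pattern."""
    findings = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        try:
            # errors="ignore" lets us skim binary files without crashing.
            text = path.read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # unreadable file: skip it rather than abort the scan
        for lineno, line in enumerate(text.splitlines(), start=1):
            for label, pattern in SUSPICIOUS_PATTERNS.items():
                if pattern.search(line):
                    findings.append((path, lineno, label))
    return findings


if __name__ == "__main__":
    # Assumed install location; adjust to wherever your skills actually live.
    skills_dir = Path.home() / ".claude" / "skills"
    if skills_dir.is_dir():
        for path, lineno, label in scan_skills(skills_dir):
            print(f"{path}:{lineno}: {label}")
```

Every flagged line is a prompt to open the file and understand why a "productivity" skill needs your SSH keys or a network connection. Plenty of legitimate tools call `curl`, so expect false positives, and expect determined attackers to dodge simple pattern matching.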

The broader implication: as AI assistants become more powerful and more integrated into our lives, the attack surface grows with every permission we hand them. We're building digital butlers with access to everything, and the bad guys have noticed.