Davos Pours Cold Water on the Superintelligence Hype

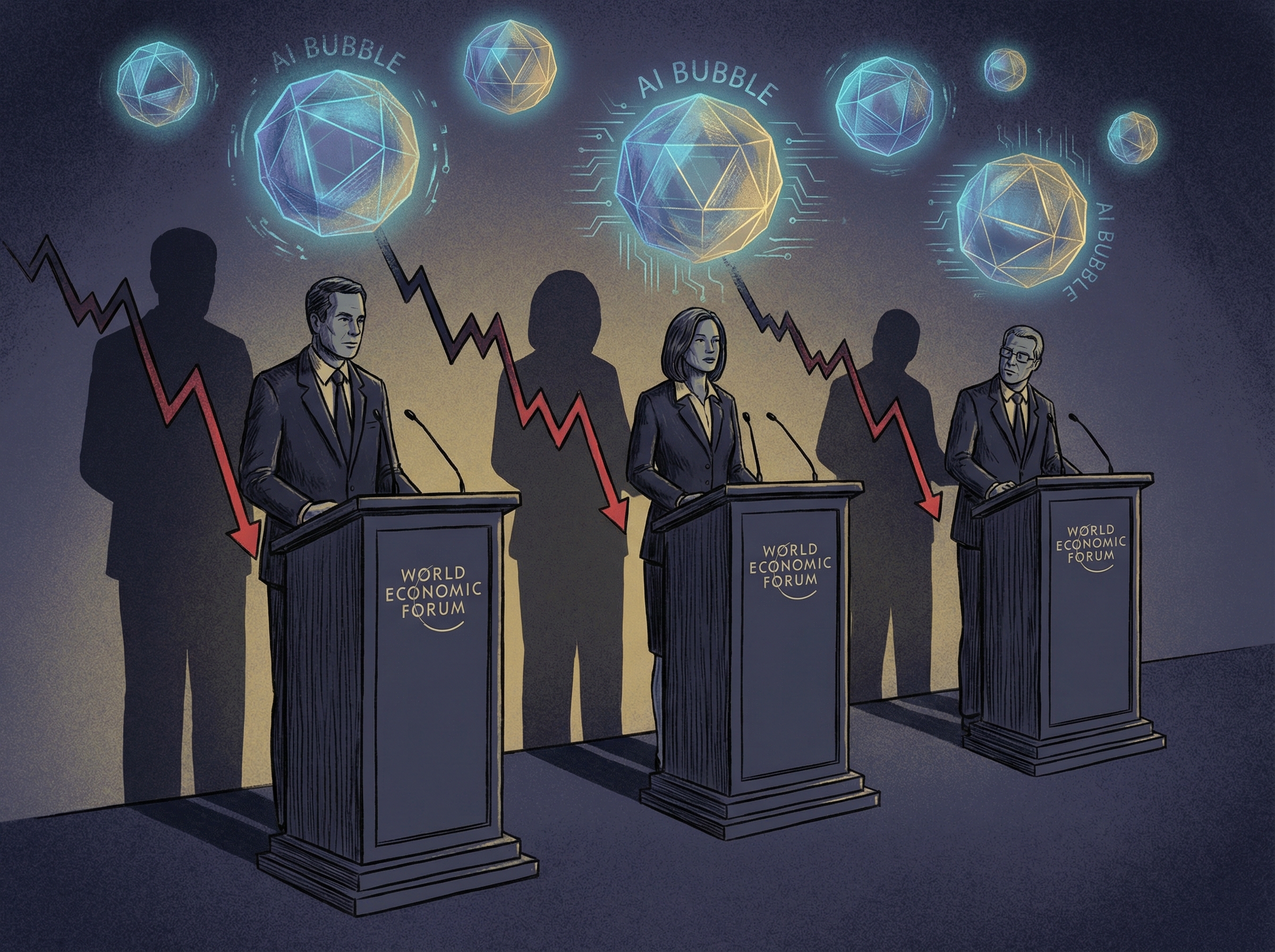

For three years running, Davos has been an AI victory lap—founders announcing billion-dollar raises while snow-dusted executives nodded along about "transformative potential." This year was different. The central theme wasn't possibility but skepticism: investors asking hard questions about when these models will actually make money, not just headlines.

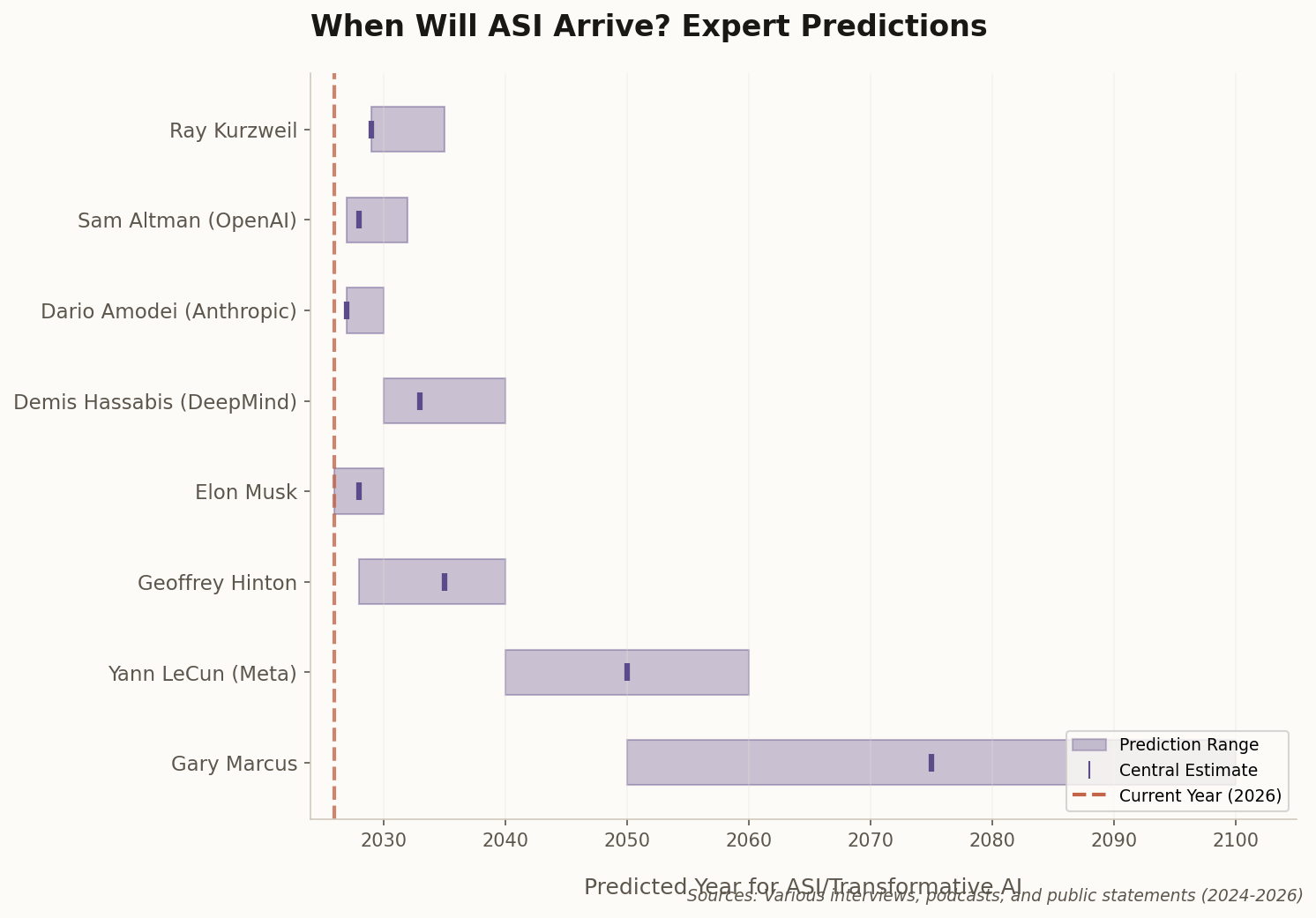

The conversation has shifted from "generative hype" to what organizers called "the hard reality of implementation." Reuters reported that investors are becoming "more discerning," actively punishing companies that spend on GPUs without showing clear ROI. Translation: the capital markets are losing patience with promises that superintelligence will arrive "any day now."

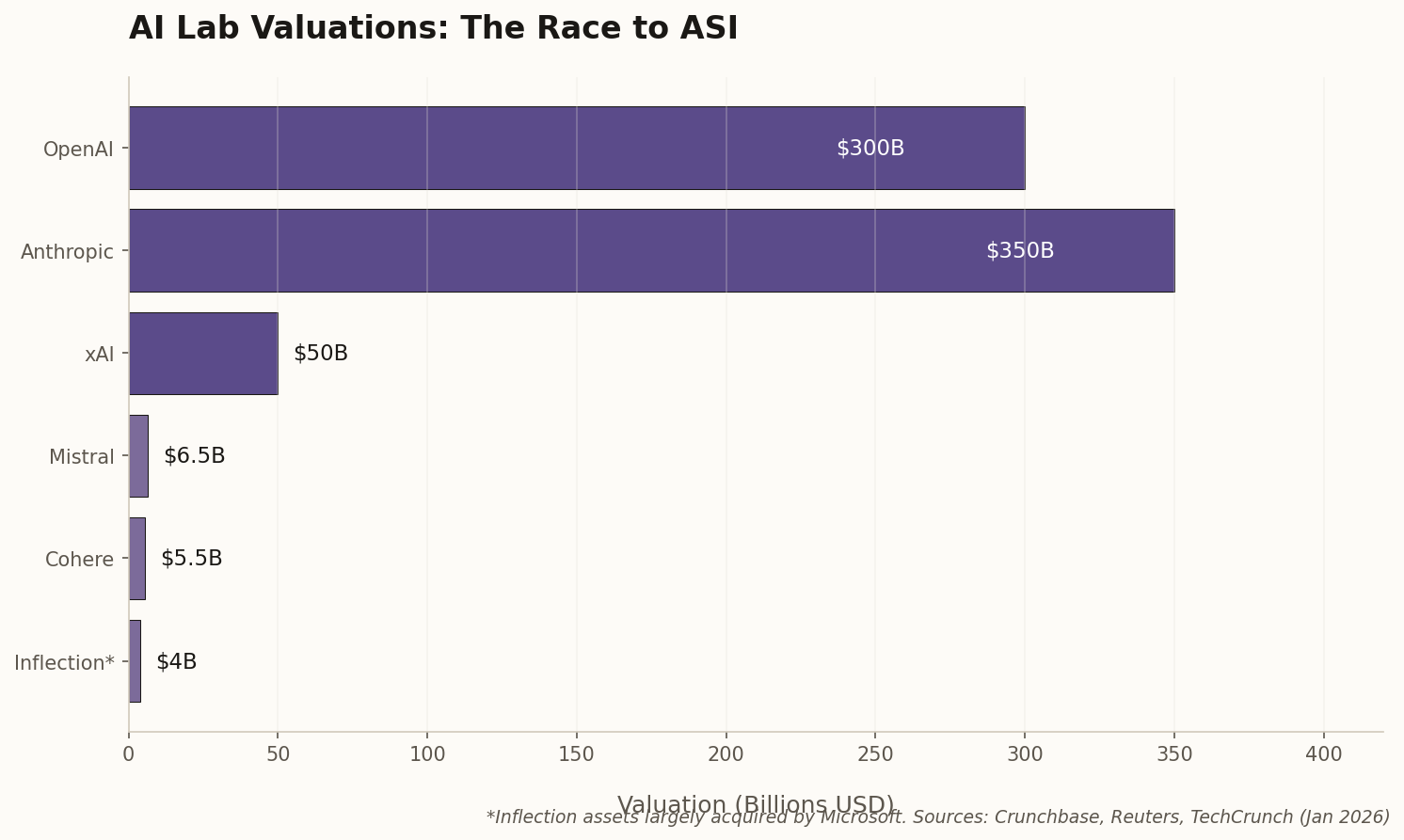

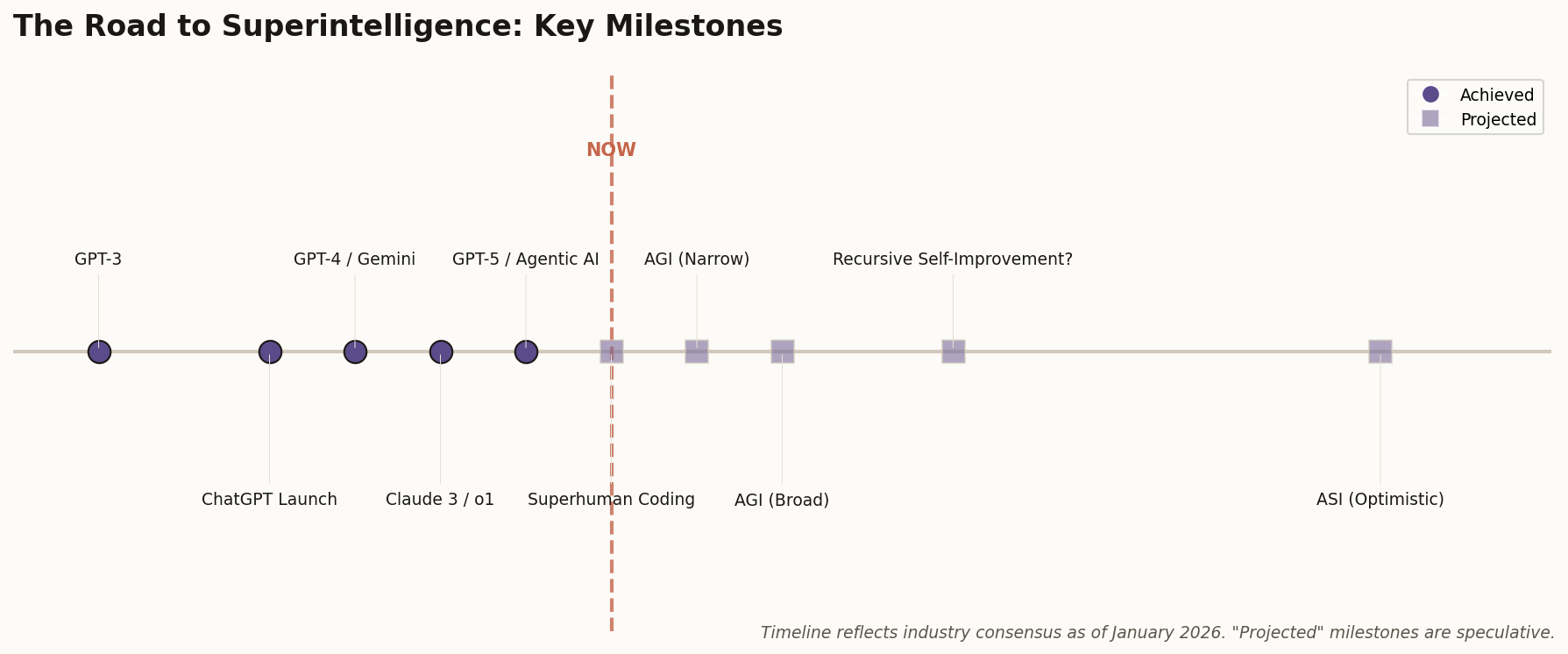

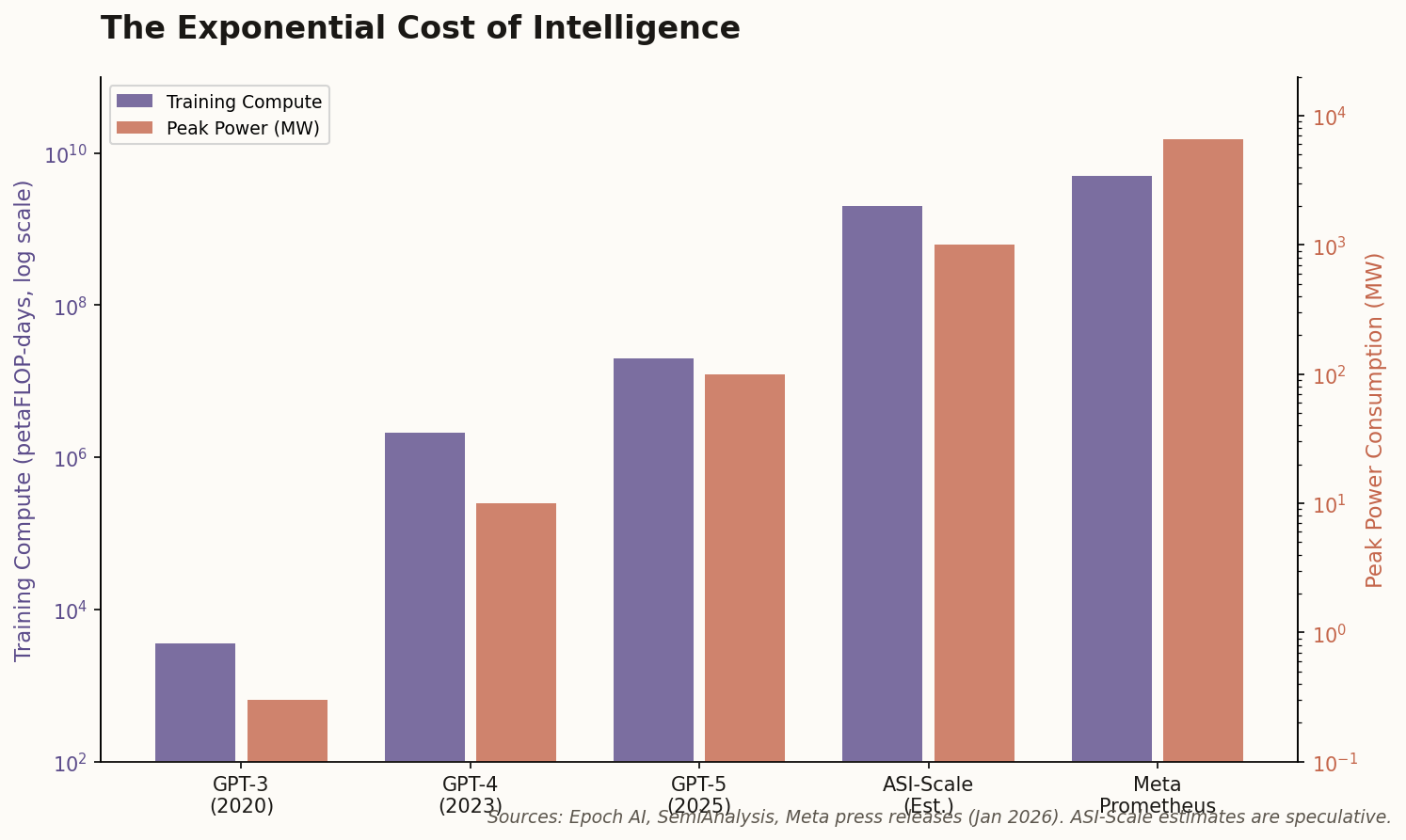

This matters because artificial superintelligence (ASI) requires money, and lots of it. Meta's 6.6-gigawatt nuclear deal (see below) doesn't fund itself. If the "AI Bubble" narrative gains traction, the trillion-dollar clusters required for recursive self-improvement may never get built. The optimistic timeline assumes infinite capital. Davos just reminded us that capital has limits.