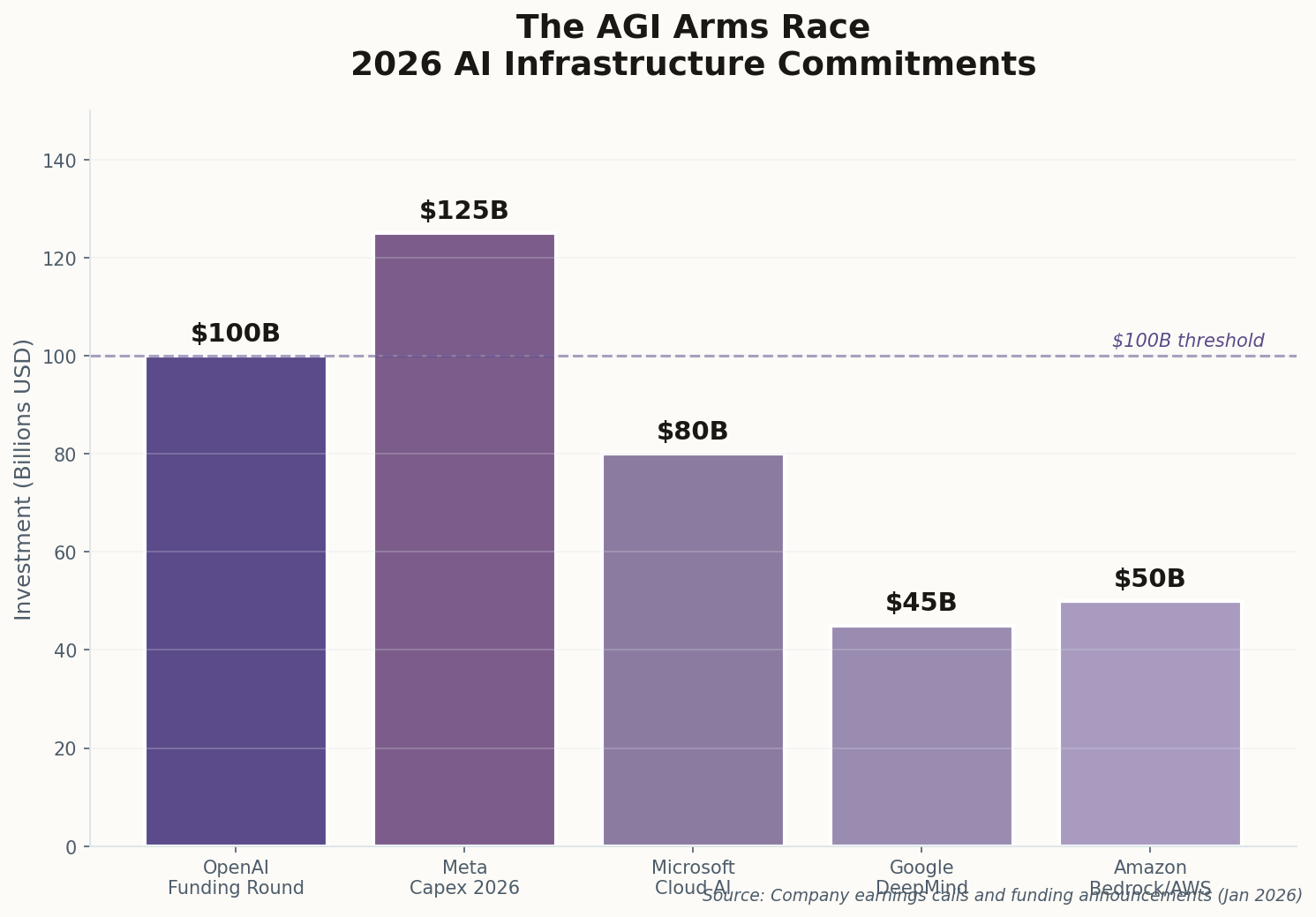

OpenAI Wants $100 Billion, and Amazon Is Writing the Check

The number is so large it deserves its own paragraph: $100 billion. That's what OpenAI is reportedly seeking in its latest funding round, with Amazon in advanced talks to contribute roughly half. If it closes, this would be one of the largest private technology investments in human history—more than the GDP of most countries, concentrated in a single company building a single technology.

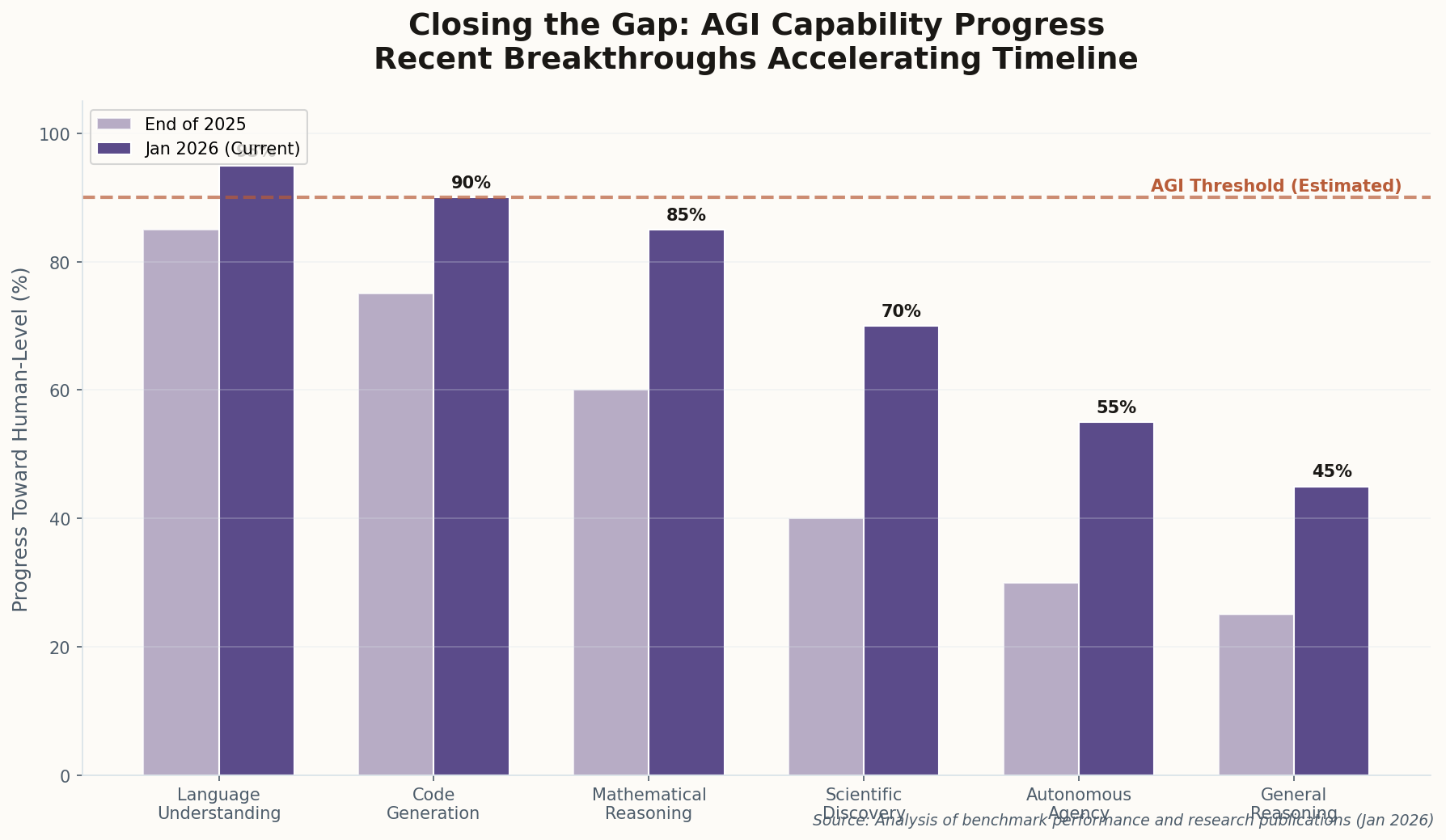

What does $100 billion buy? According to sources close to the deal, it buys the compute infrastructure necessary to train artificial general intelligence (AGI). Not "advanced AI" or "more capable models," but AGI. The scale of capital lends weight to what researchers have been whispering: the leading labs believe we're in the final stretch, and the limiting factor is no longer algorithmic insight but raw computational power.

The Amazon partnership is particularly telling. Amazon already has skin in the game through its multibillion-dollar investment in Anthropic, and now it would be putting corporate money behind OpenAI's vision as well. The cloud wars have become the AGI wars, and the hyperscalers are hedging by backing multiple horses. The implicit message: nobody wants to be the company that didn't invest in the technology that changes everything.