Your New Performance Review Metric: Agents Managed

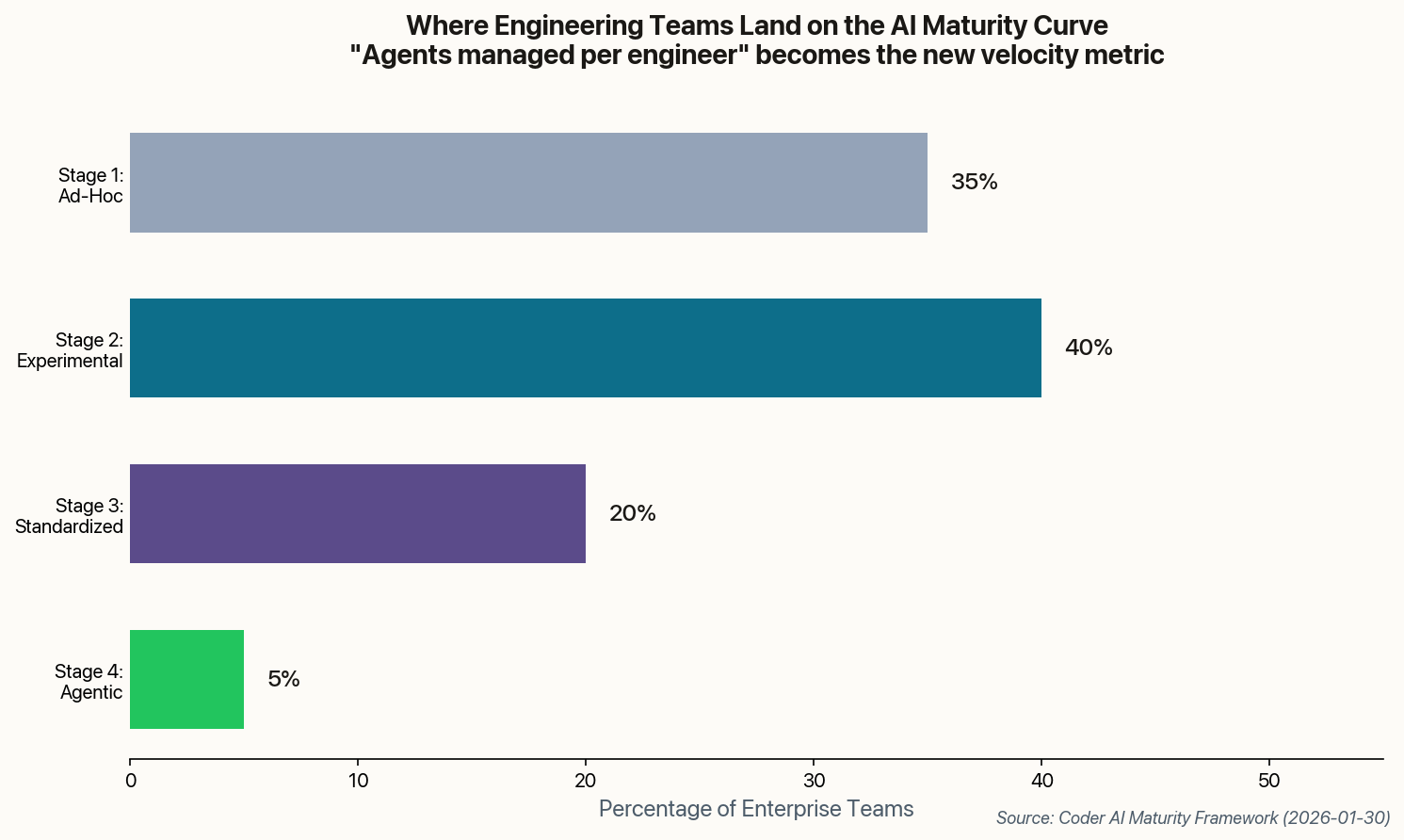

Coder just dropped a standardized framework that makes the quiet part loud: your value as an engineer is no longer measured in lines of code shipped. It's measured in agents orchestrated.

The new "AI Maturity Assessment" tool places engineering teams on a four-stage curve, from chaotic "ad-hoc" AI usage to the holy grail of "Standardized Agentic Workflows," where human coding is minimal. Stage 4 teams write almost no code themselves; they architect swarms of agents that do.

This isn't aspirational consulting-speak. It's a benchmarking tool designed to show your CEO exactly where your team stands relative to competitors. And here's the uncomfortable implication: if you're a developer who can't govern agents, you're now officially legacy infrastructure.