Anthropic Ships an Agent for the Rest of Us

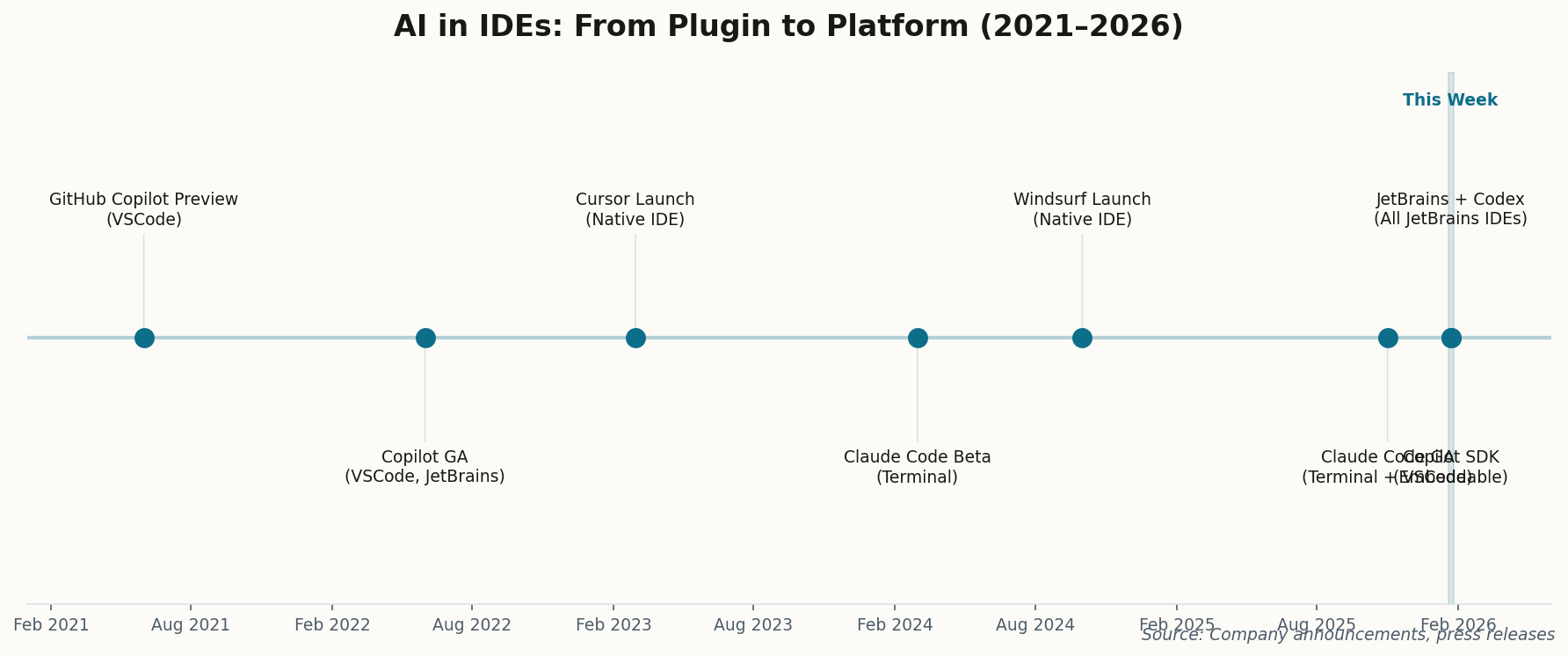

Anthropic launched Claude Cowork this week: a general-purpose AI agent for macOS that handles file management and document-processing tasks. The real story isn't the product; it's what they built it with.

Cowork was created entirely using Claude Code, Anthropic's terminal-based coding assistant. That's an AI tool building its own autonomous offspring. And it isn't a research demo but a shipping product for everyday users who will never touch a command line.

This marks a pivot point. For years, AI coding tools were developer utilities: useful, but contained. Cowork signals that the output of AI-assisted development can itself be autonomous software that works on behalf of non-technical users. The code-generation flywheel is spinning faster than most anticipated.

The implication: If Claude Code can build Cowork, what stops your company's AI assistant from building specialized agents for your specific workflows? The barrier to custom automation just dropped to "describe what you want."