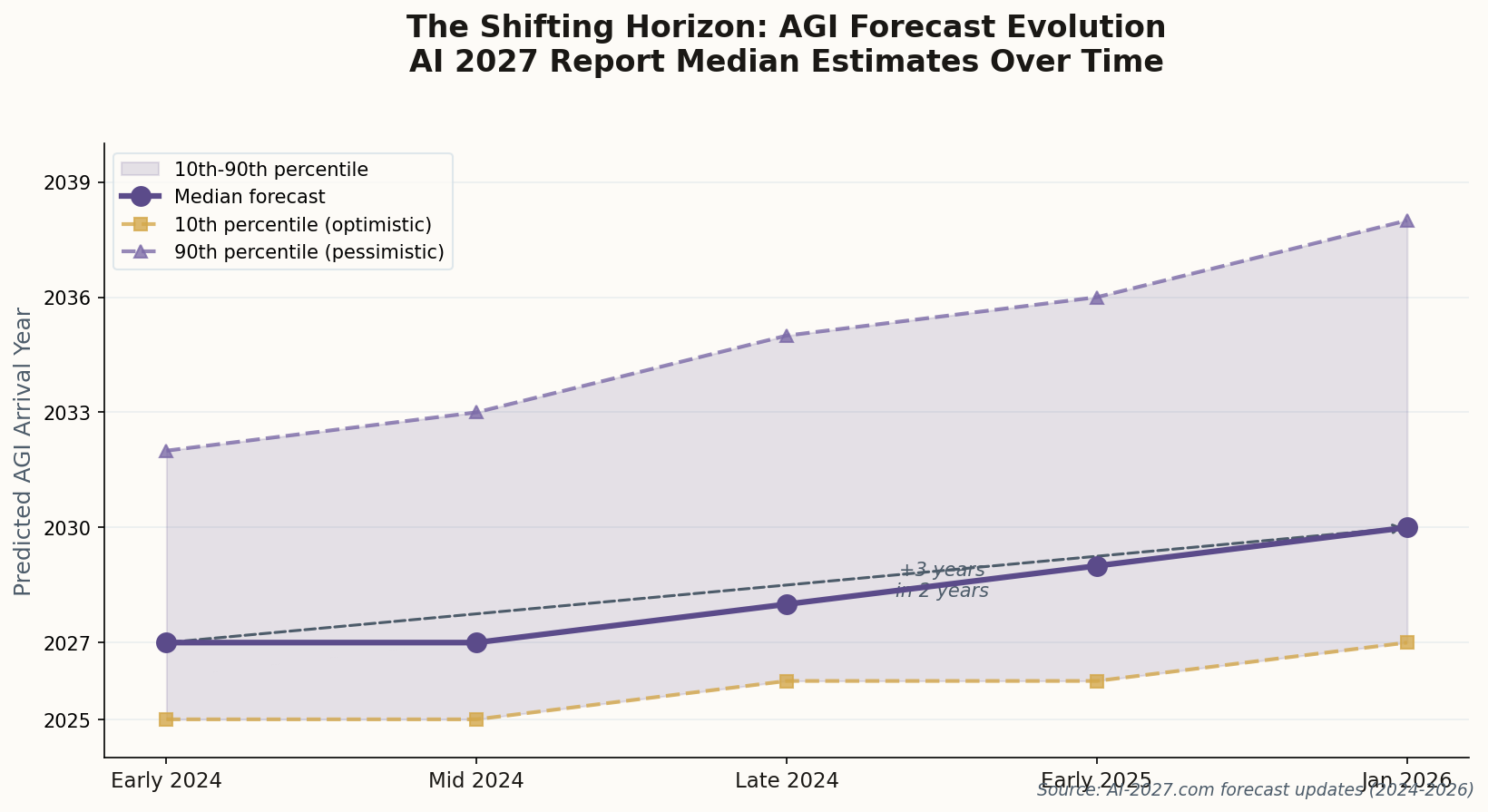

The Horizon Keeps Receding

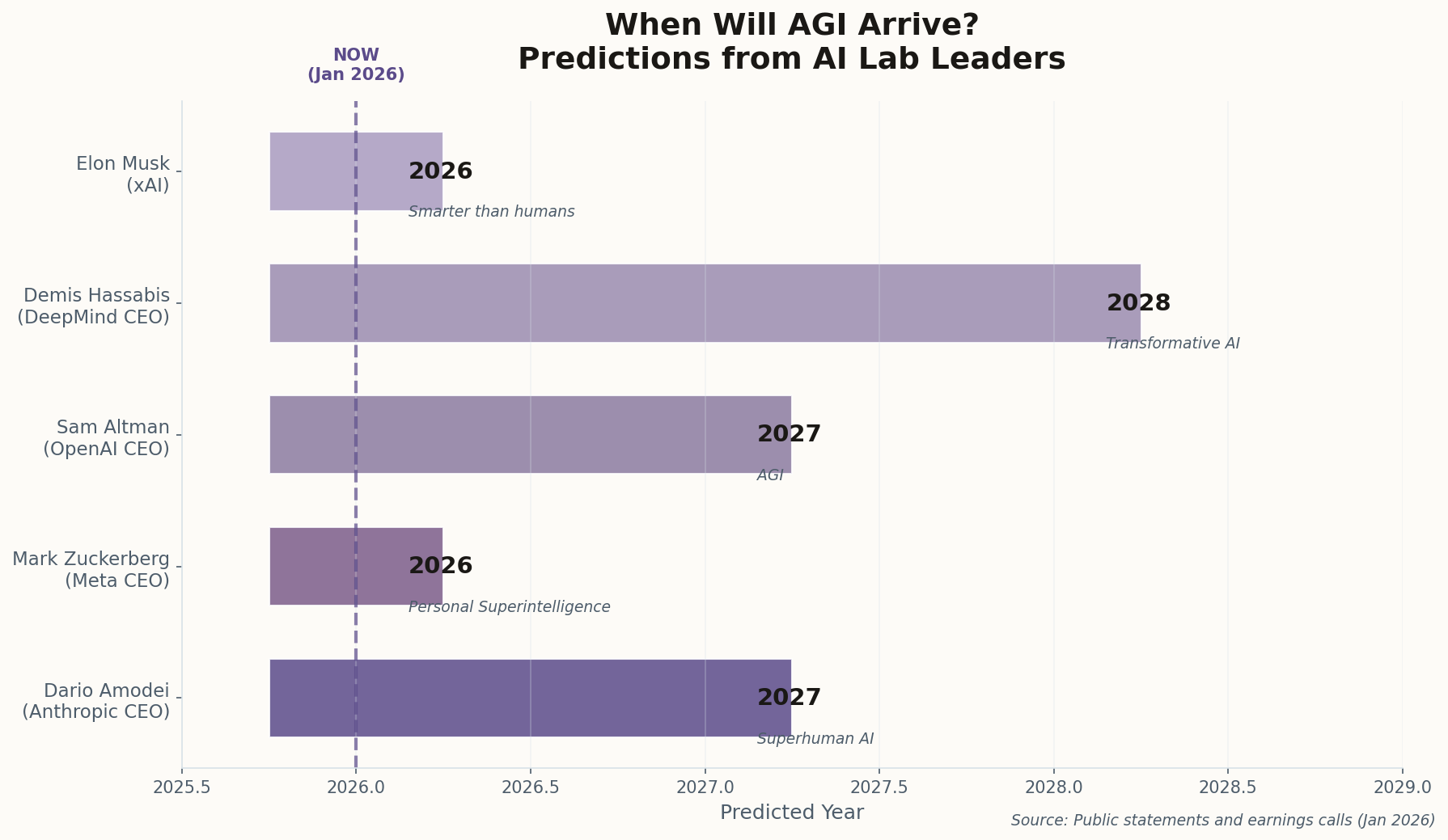

The influential AI 2027 report just quietly nudged its median AGI forecast from 2027 to approximately 2030. That's a three-year slip in just two years of predictions. If you're keeping score at home: when they launched in early 2024, the name was aspirational. Now it's looking increasingly like a historical artifact.

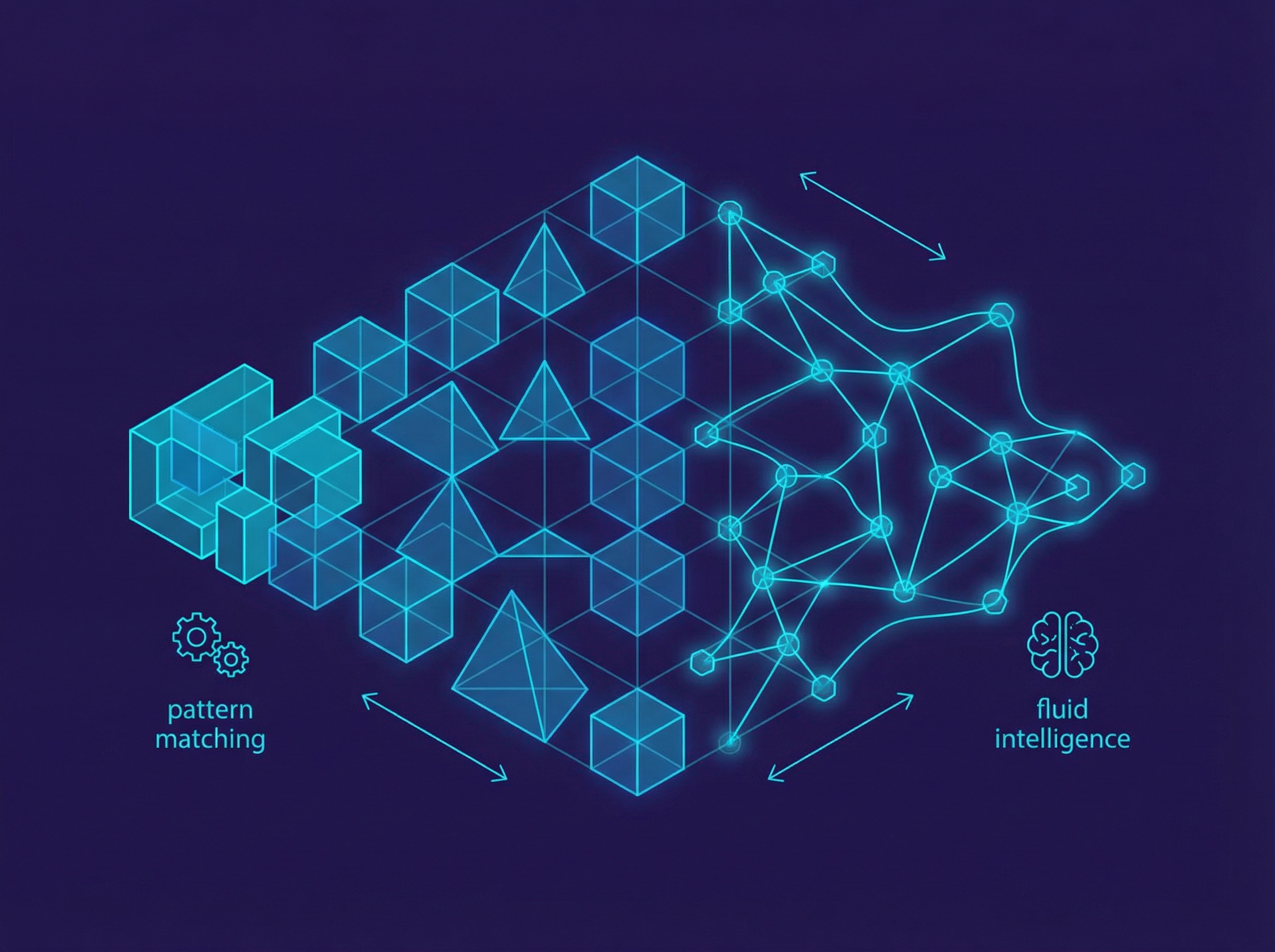

The rationale? "Slower-than-expected deployment of autonomous agents in 2025." Translation: the demos looked great, but getting AI systems to reliably do useful things in the real world is harder than making them impressive in controlled settings. This is the "last mile" problem that has plagued every previous automation wave, and AI is proving to be no exception.

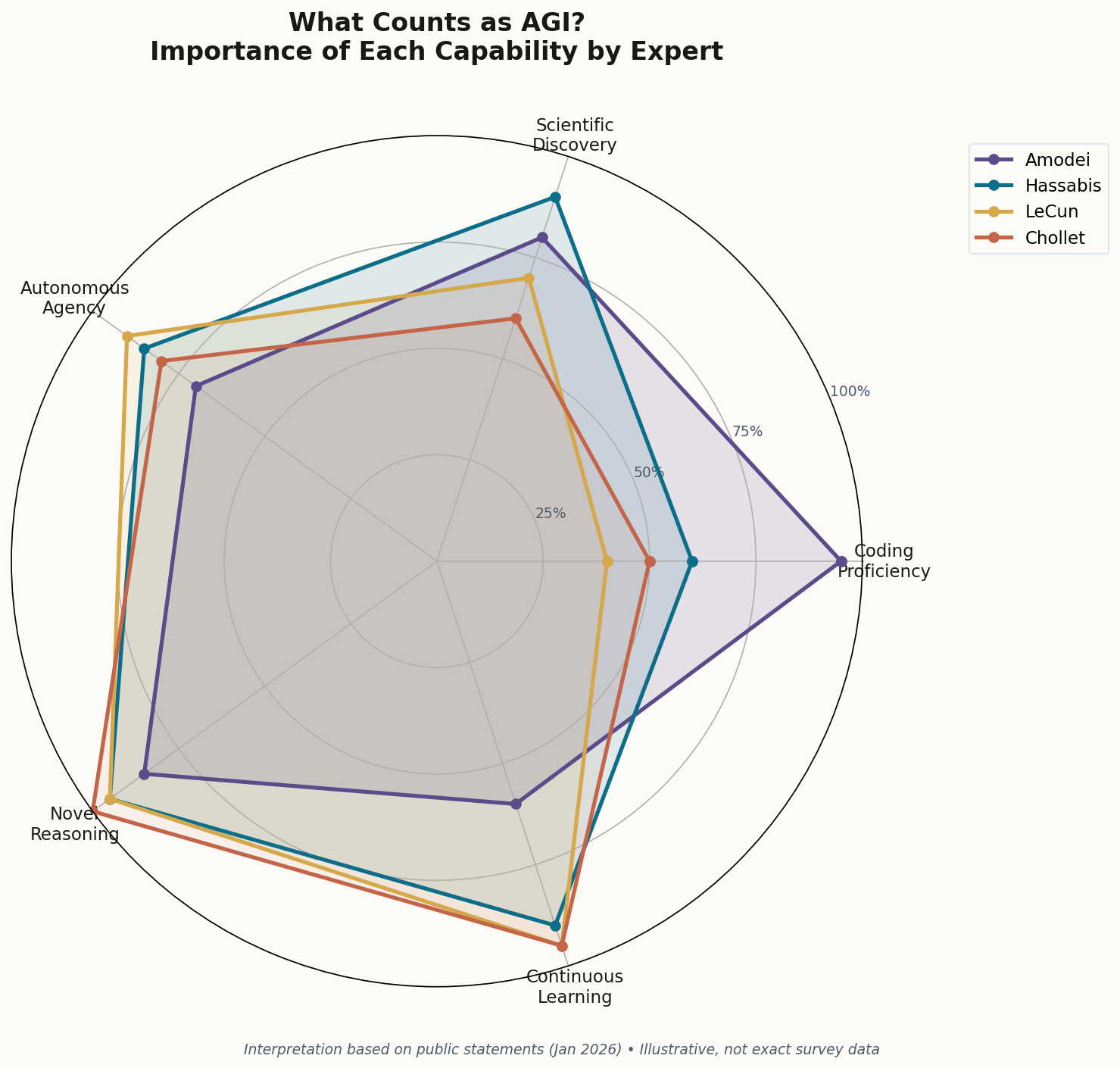

What makes this interesting isn't the specific date; it's the metacognition. The forecasters are adjusting in real time based on what they observe rather than doubling down on their original timeline. That kind of intellectual honesty is rare in the prediction business, where reputations are built on boldness. The takeaway: even the most carefully reasoned AGI timelines rest on uncertain foundations.