Cursor Spawns a Swarm: Subagents Change the Game

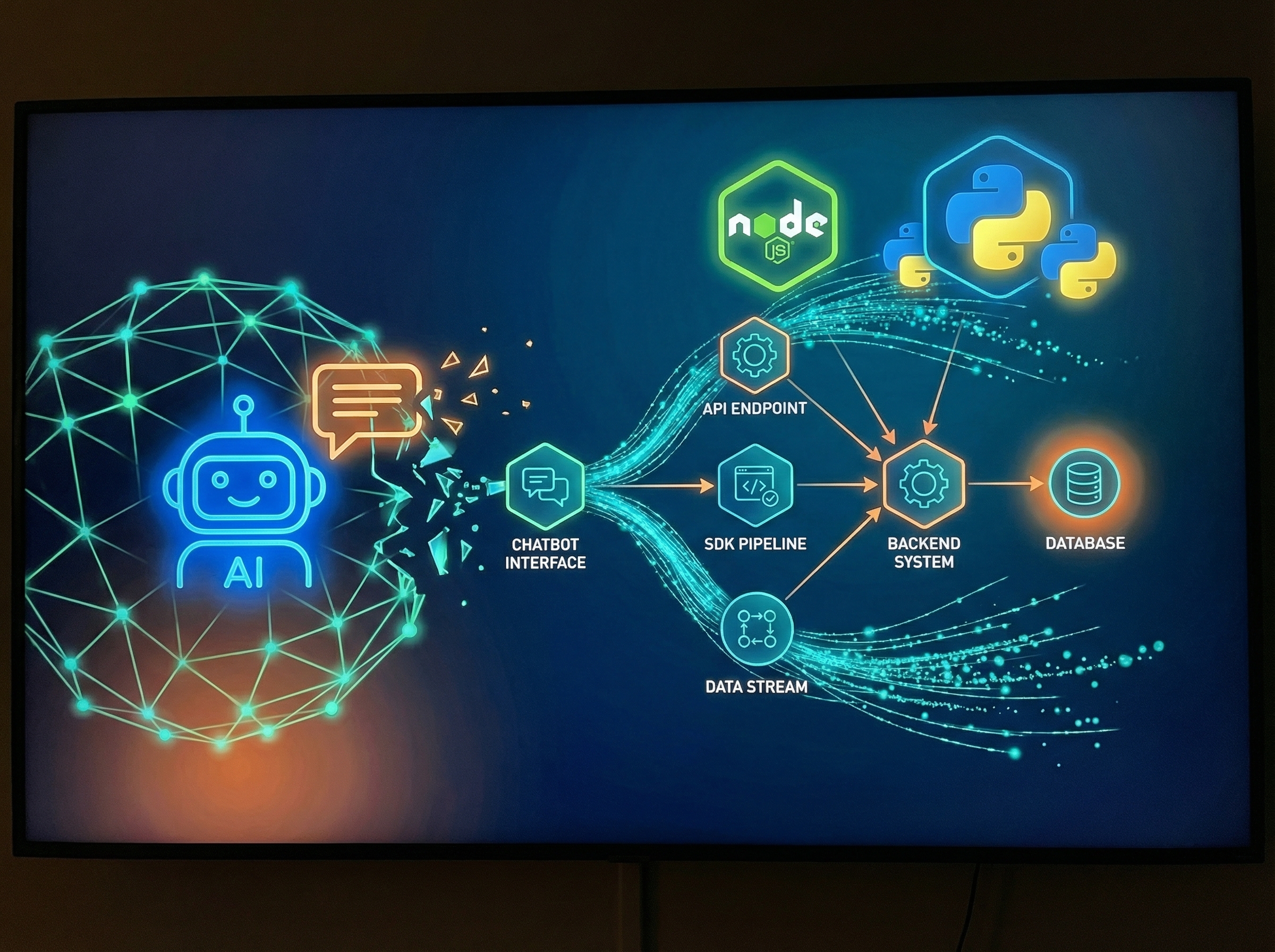

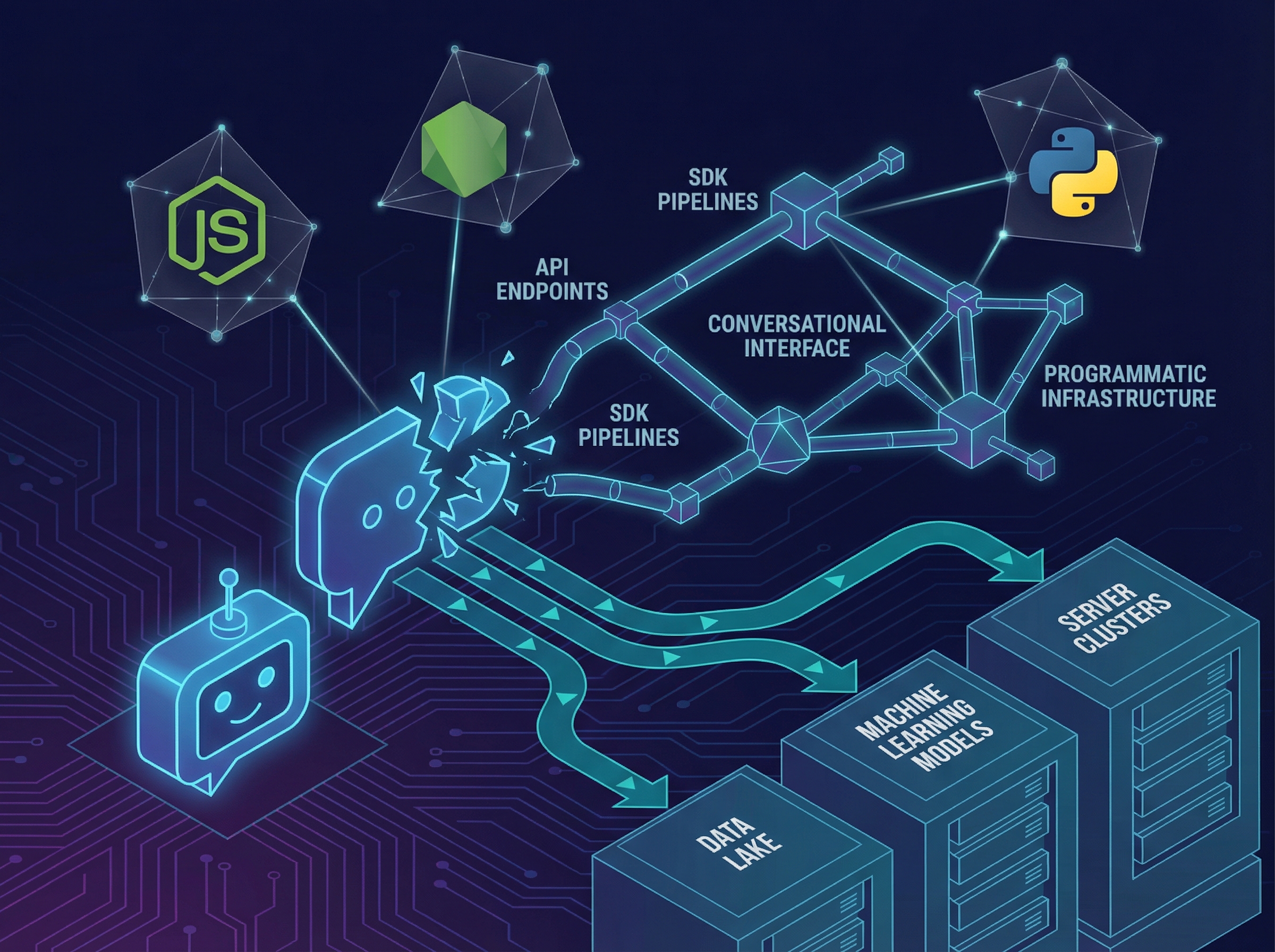

Forget autocomplete. Cursor v2.4 doesn't just help you write code; it delegates. The new Subagents feature lets the main agent spin up independent, task-specific agents that each handle part of a larger objective in parallel. One agent might refactor your authentication module while another writes tests and a third updates the documentation. You watch the diff roll in.

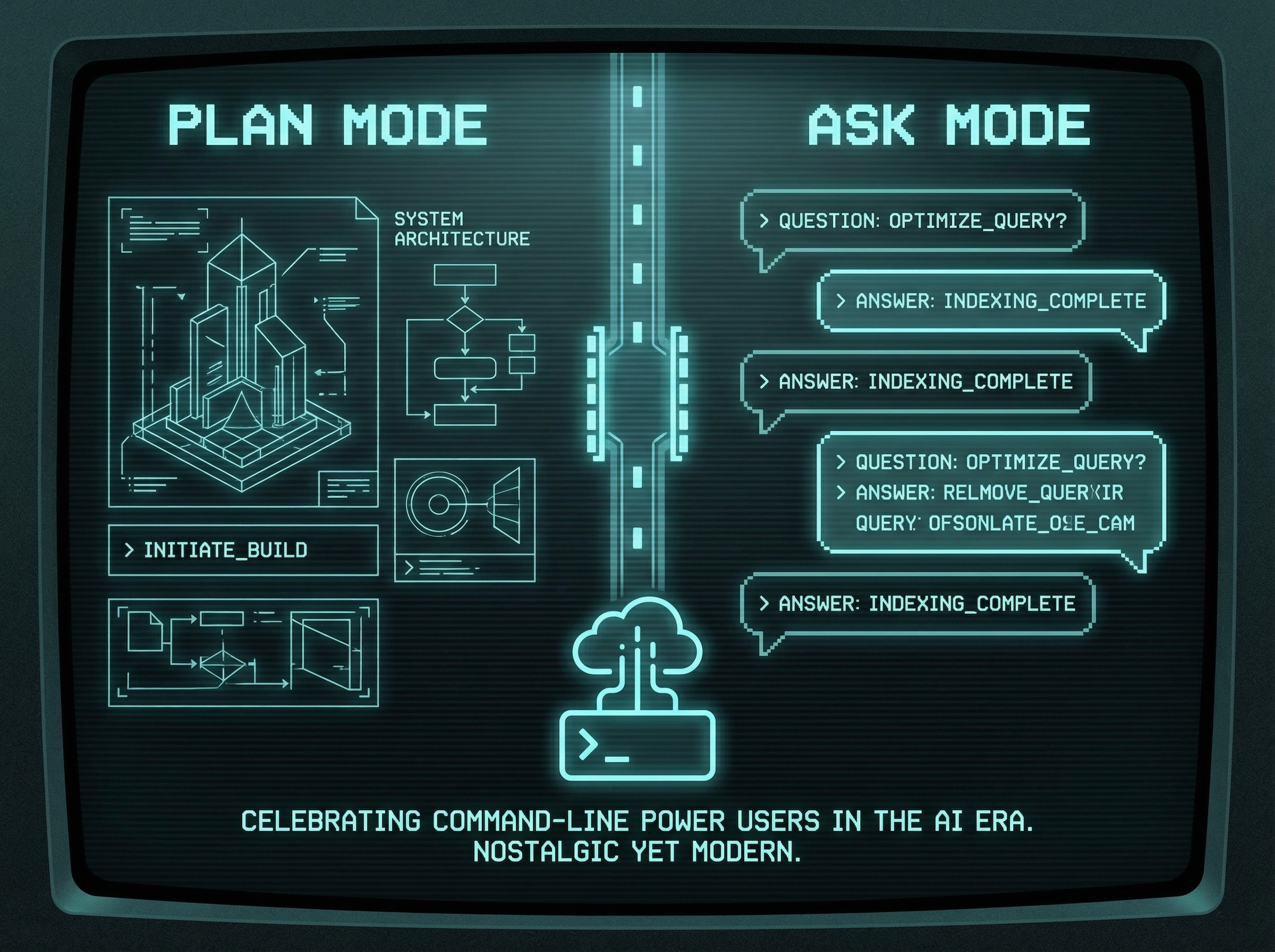

This isn't an incremental improvement; it's a categorical shift. For years, AI coding tools operated as souped-up typeahead: you write, they predict, you accept or reject. Subagents invert that model. You describe the goal, the AI decomposes it into subtasks, and multiple workers execute them simultaneously. The developer's role shifts from author to orchestrator.
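The decompose, fan out, fan in loop described above is a familiar concurrency pattern. The sketch below illustrates it in Python under loudly stated assumptions: it says nothing about how Cursor implements Subagents, the names `decompose`, `run_subagent`, and `orchestrate` are hypothetical, and the model calls are stubbed out with `asyncio.sleep`.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Subtask:
    """A hypothetical unit of work handed to one subagent."""
    name: str
    instructions: str


def decompose(goal: str) -> list[Subtask]:
    # Hypothetical planner: a real orchestrator would ask a model to split the goal.
    # Here the split is hard-coded to mirror the example in the article.
    return [
        Subtask("refactor", f"Refactor the auth module for: {goal}"),
        Subtask("tests", f"Write tests covering: {goal}"),
        Subtask("docs", f"Update documentation for: {goal}"),
    ]


async def run_subagent(task: Subtask) -> str:
    # Stand-in for a model call; each subagent sees only its own instructions.
    await asyncio.sleep(0.01)  # simulate I/O-bound model latency
    return f"[{task.name}] {task.instructions}"


async def orchestrate(goal: str) -> list[str]:
    subtasks = decompose(goal)
    # Fan out: every subagent runs concurrently; gather returns results in input order.
    results = await asyncio.gather(*(run_subagent(t) for t in subtasks))
    # Fan in: the parent collects the results for a human to review, not to auto-merge.
    return list(results)


if __name__ == "__main__":
    for line in asyncio.run(orchestrate("move sessions to JWT")):
        print(line)
```

Because the subagents are independent, total wall-clock time is roughly that of the slowest worker rather than the sum of all of them; the trade-off is that the parent must reconcile outputs that were produced without seeing each other.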

Two other features in this release tell us where Aman Sanger's team thinks this is heading. Image Generation is now built directly into the editor, powered by models like Google's Nano Banana Pro, collapsing the barrier between code and creative assets. And Cursor Blame, available to Enterprise users, distinguishes AI-generated code from human-written code in git history. That last one sounds like a mere feature. It's actually an admission: we're going to need to know who (or what) wrote this.

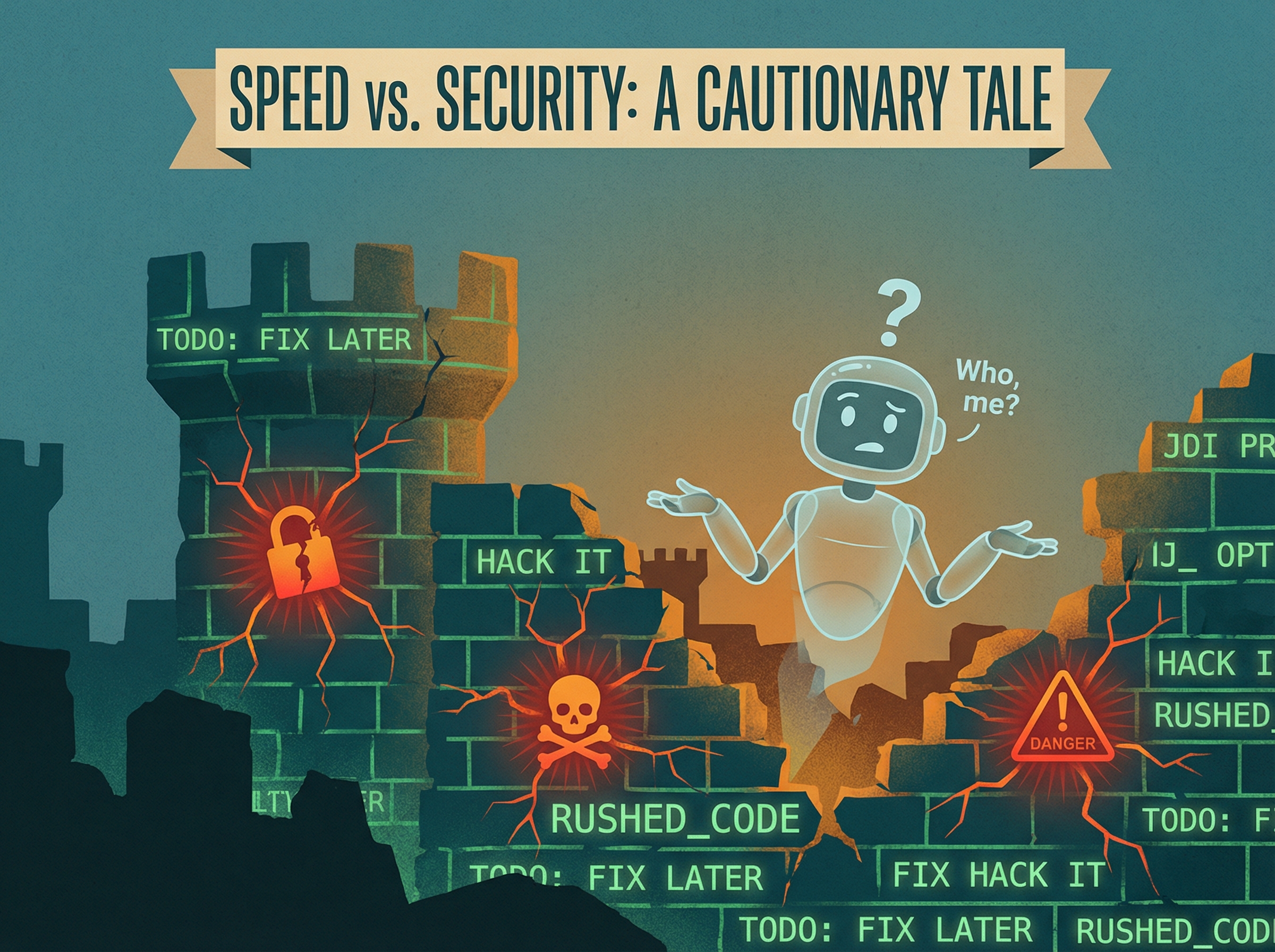

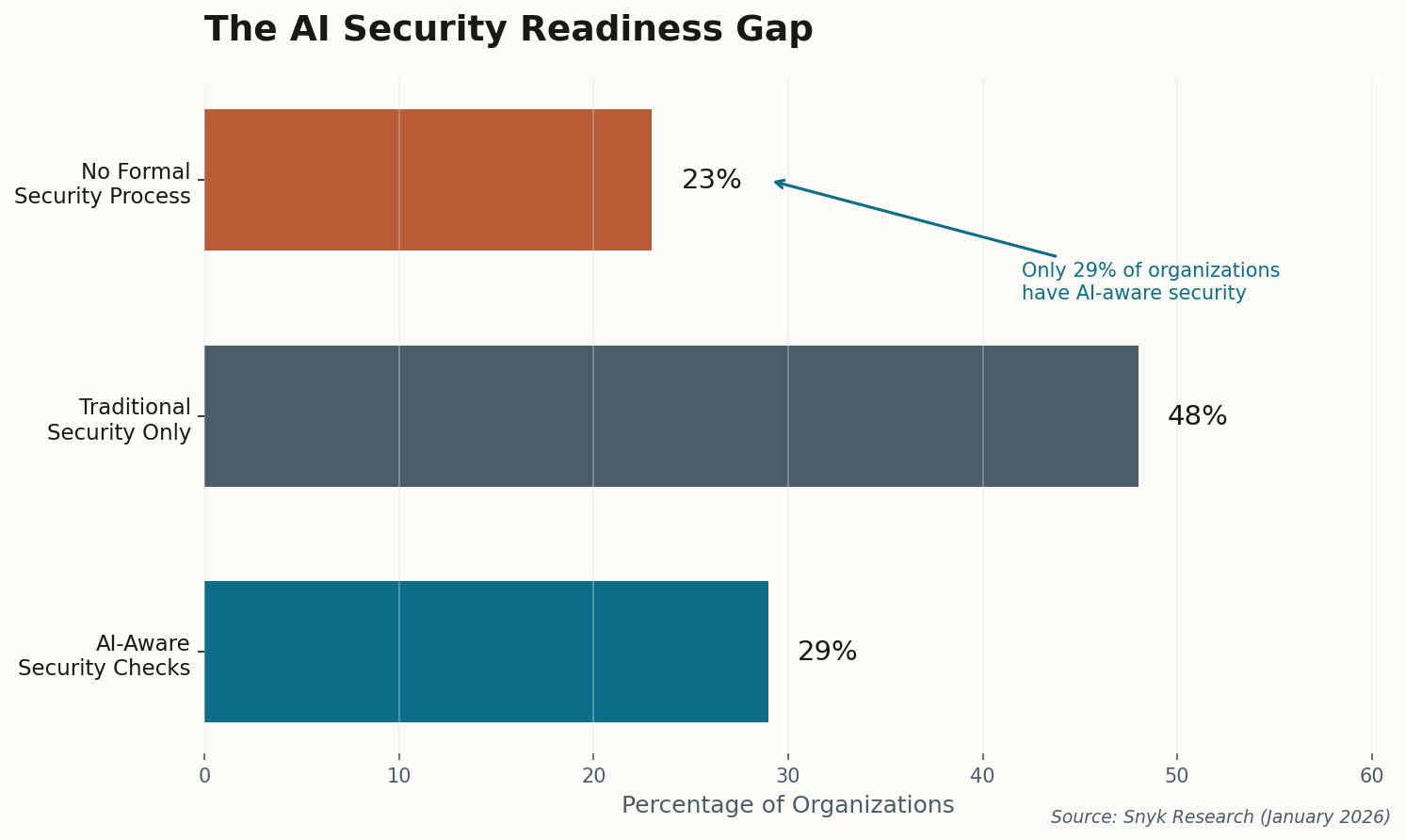

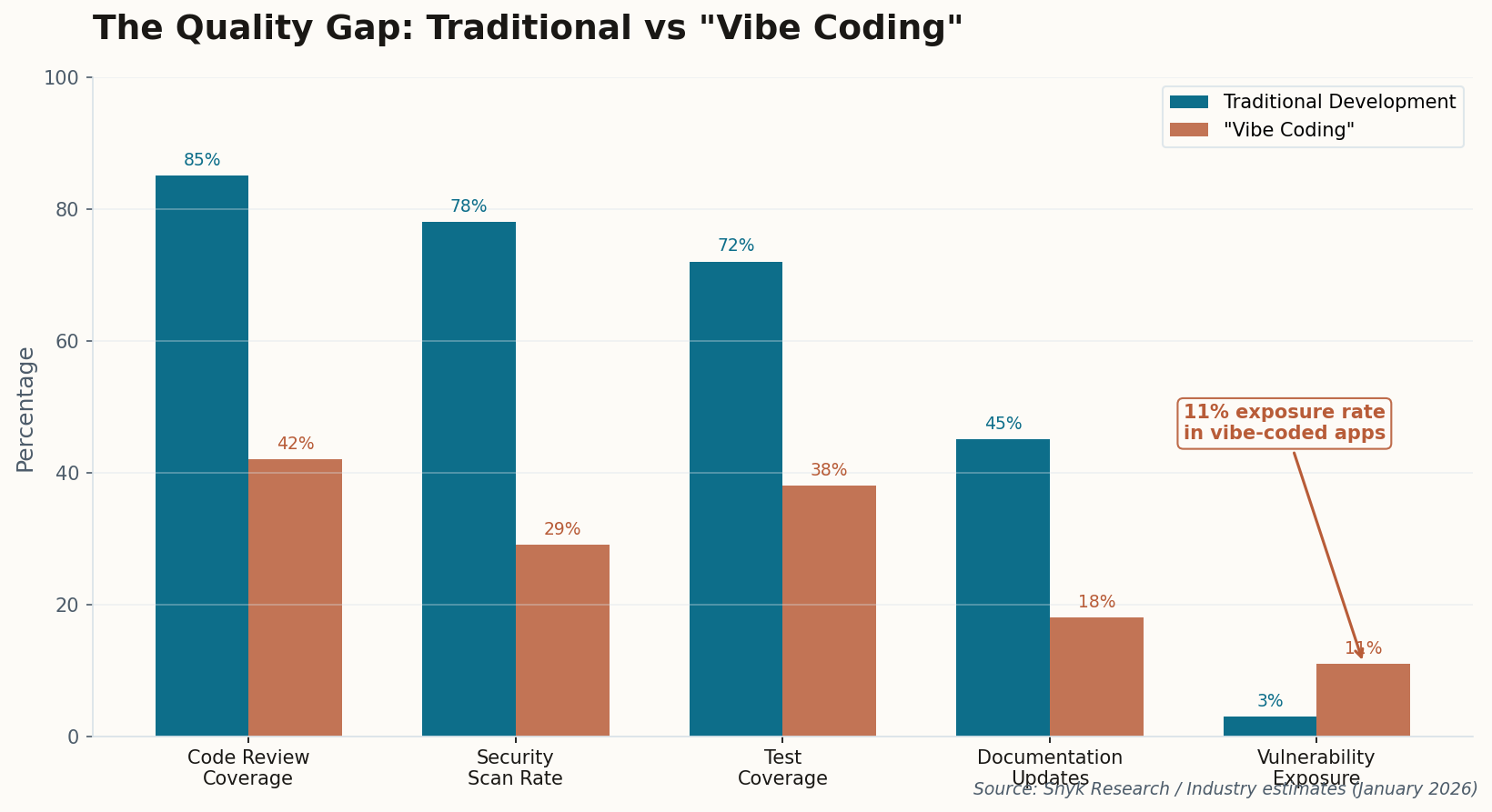

The question isn't whether you'll use autonomous agents for coding. It's whether you'll trust them without a human checkpoint, and whether your organization's security posture is ready for code that writes itself.