Cursor Spawns Subagents, and Everything Changes

The latest Cursor update (v0.44+) crosses a line that most AI coding tools have only flirted with: genuine autonomy. The new "Subagents" feature lets the main agent spawn up to eight parallel worker streams to tackle complex refactors, while that parent agent orchestrates the chaos.

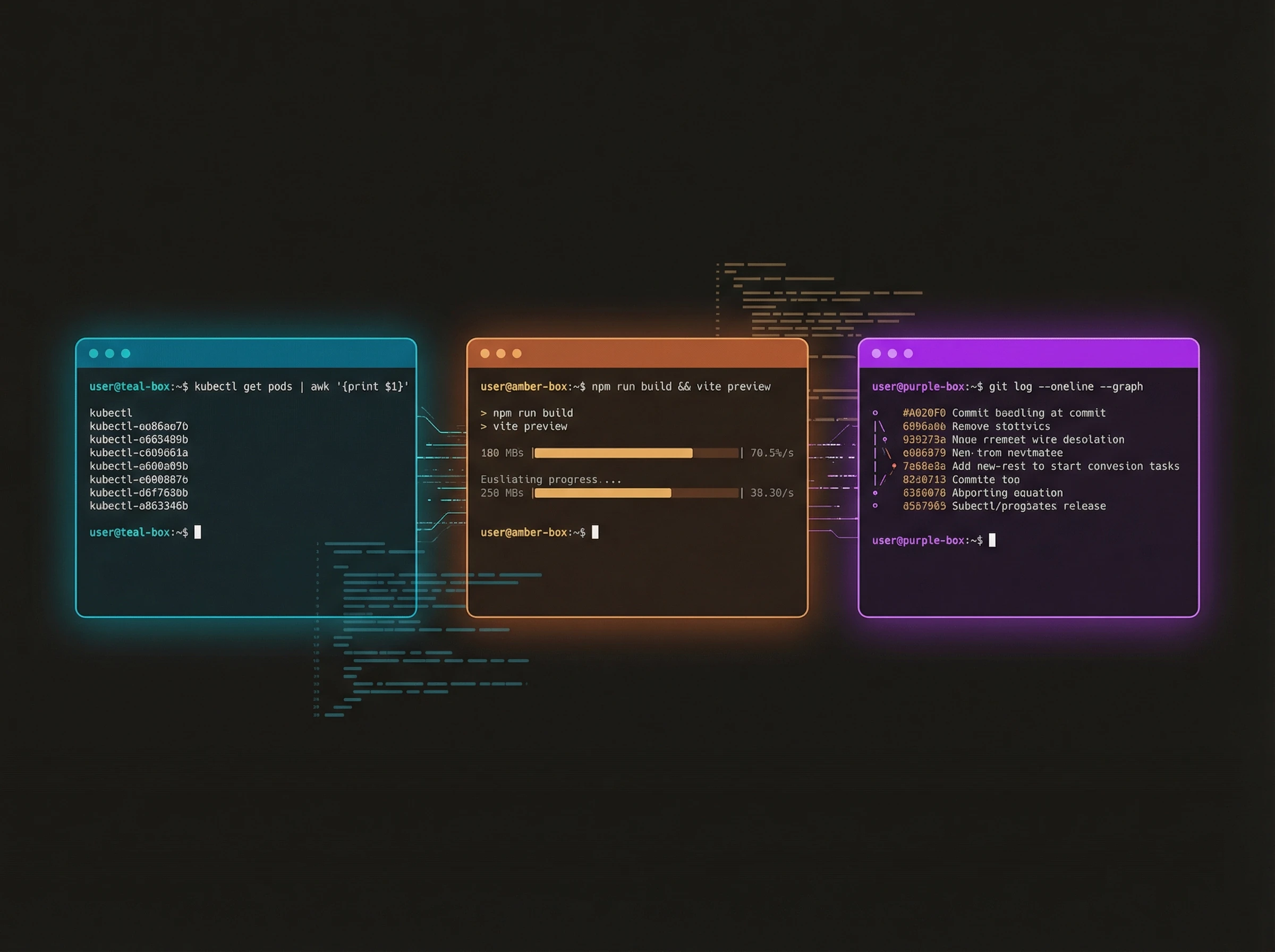

But here's the part that matters: it can now ask clarifying questions mid-task instead of guessing. This sounds trivial until you've watched an agent confidently delete your authentication middleware because it "seemed unused." Keeping a human in the loop no longer means constant supervision; it means surgical intervention when the AI knows it doesn't know.

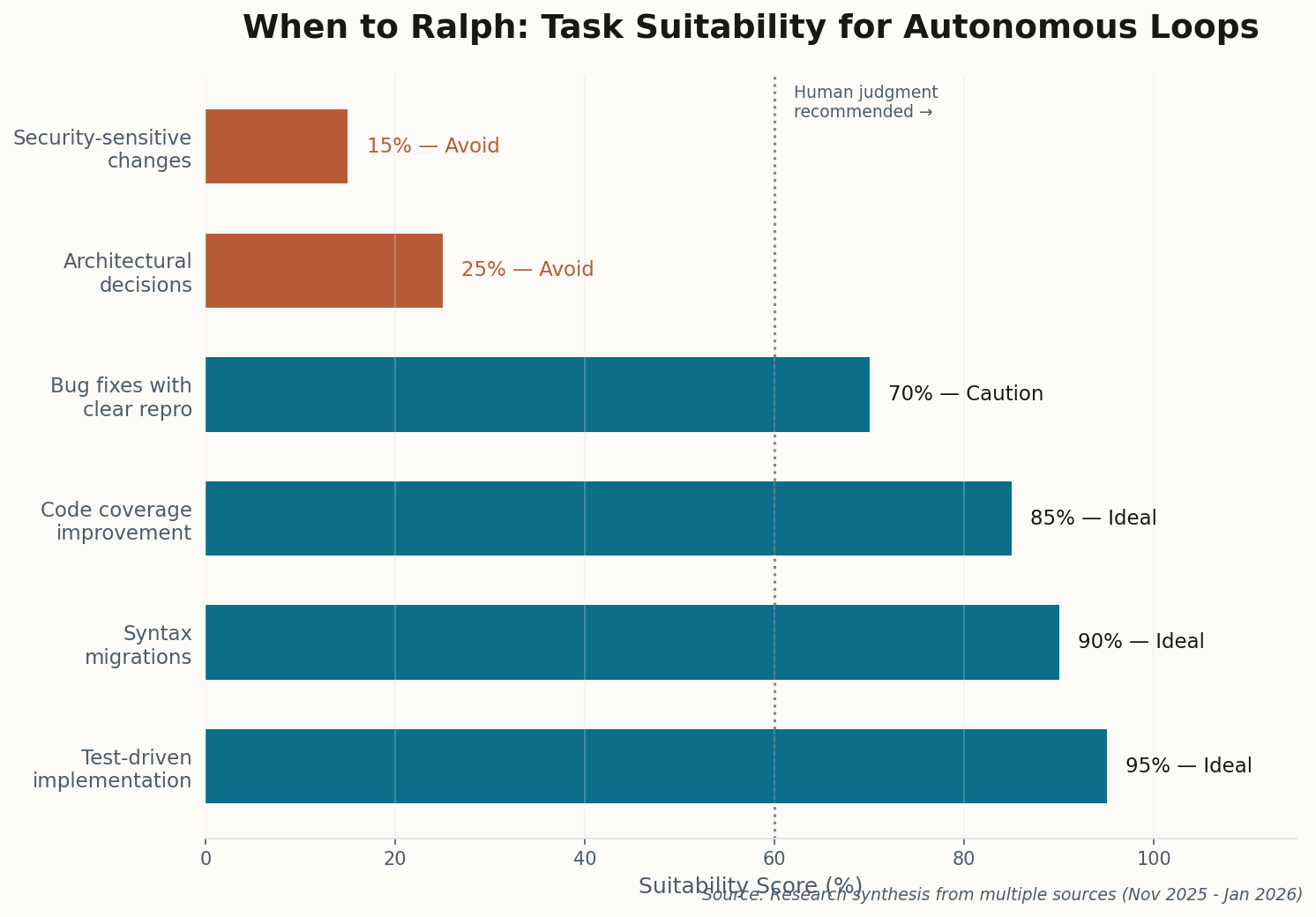

The implications run deeper than productivity gains. If an AI can spawn workers, ask for help when stuck, and coordinate multi-file changes without losing context, we're no longer talking about autocomplete. We're talking about delegation. The question is whether you're delegating to a junior developer or a very confident intern with root access.