Carney's Davos Masterclass: Why Classical Storytelling Still Wins

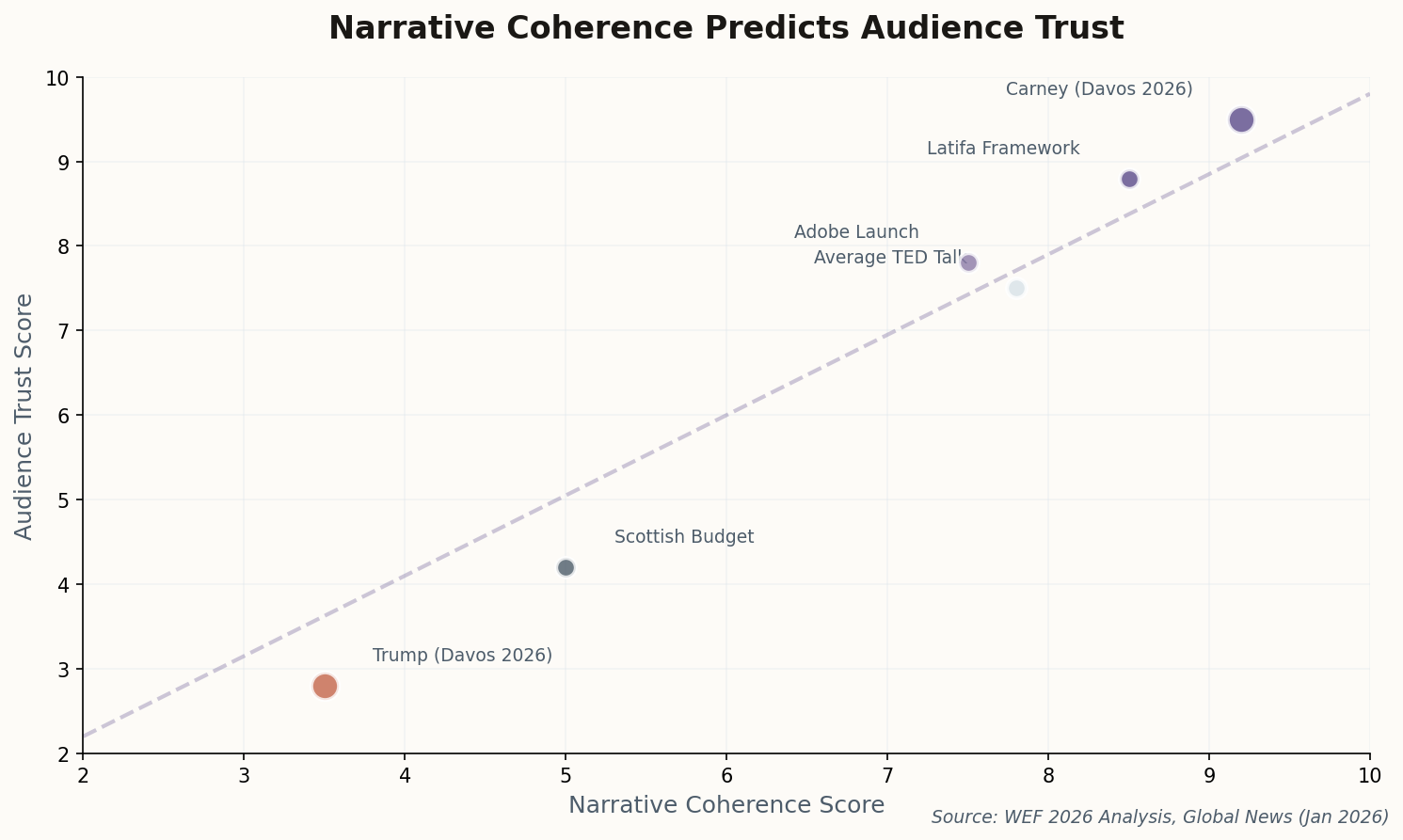

Canadian Prime Minister Mark Carney walked off the World Economic Forum stage to something that rarely happens in Davos: a standing ovation. Not for flash. Not for tech demos. For narrative architecture.

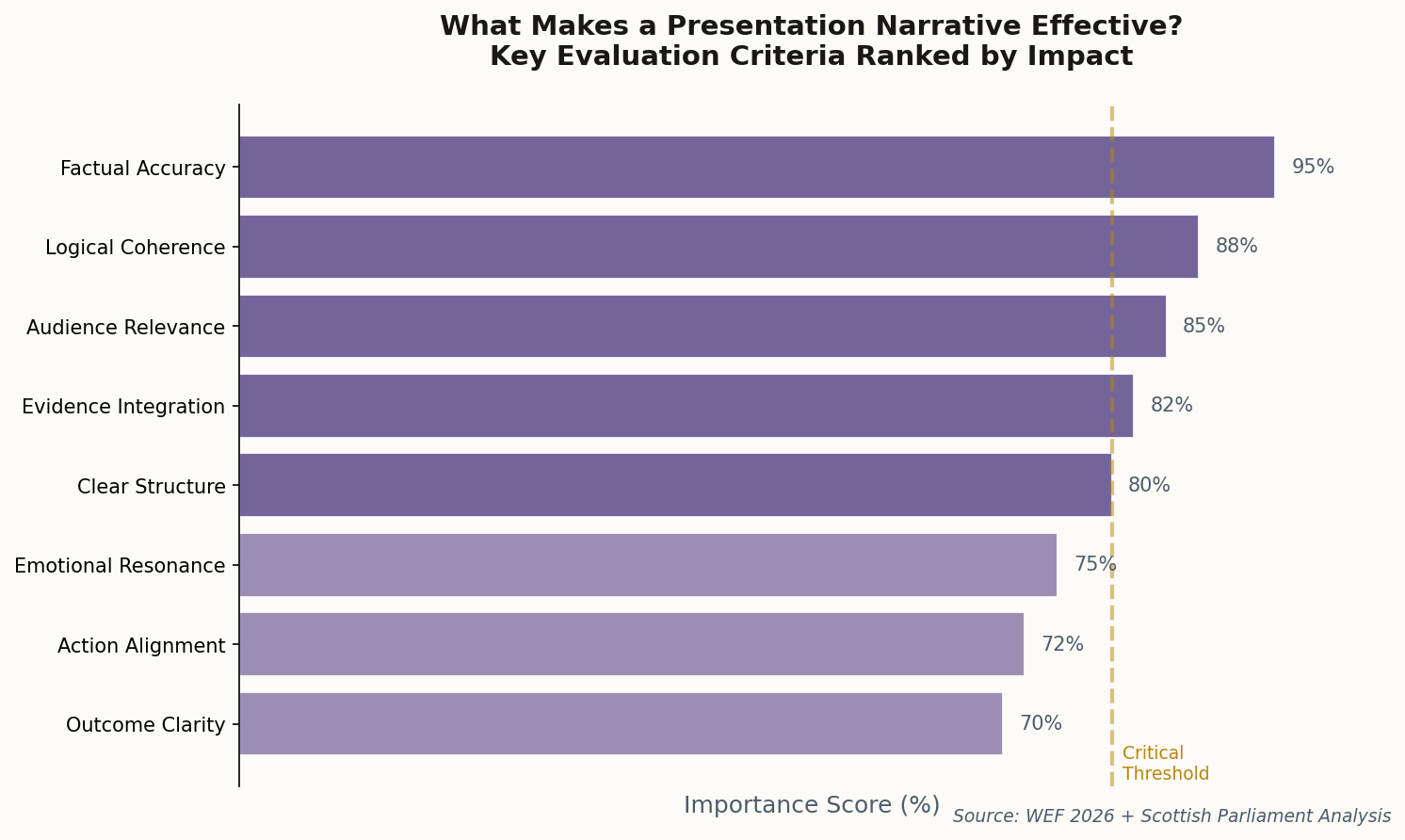

The analysts who dissected his speech afterward found something instructive: Carney built his argument on a "past vs. future" structure that reframed geopolitical uncertainty without resorting to jargon. His vocabulary was precise but accessible. He repeated key themes only where emphasis demanded it, never as filler. When he argued that "the rule-based order is fading," he followed immediately with a concrete vision for Canada's role rather than a vague aspiration.

The speech succeeds because it follows a principle that presentation coaches have long taught but rarely see executed: lead with stakes, layer in evidence, close with actionable implications. Carney didn't just tell the audience what was happening; he helped them understand what it meant for them.

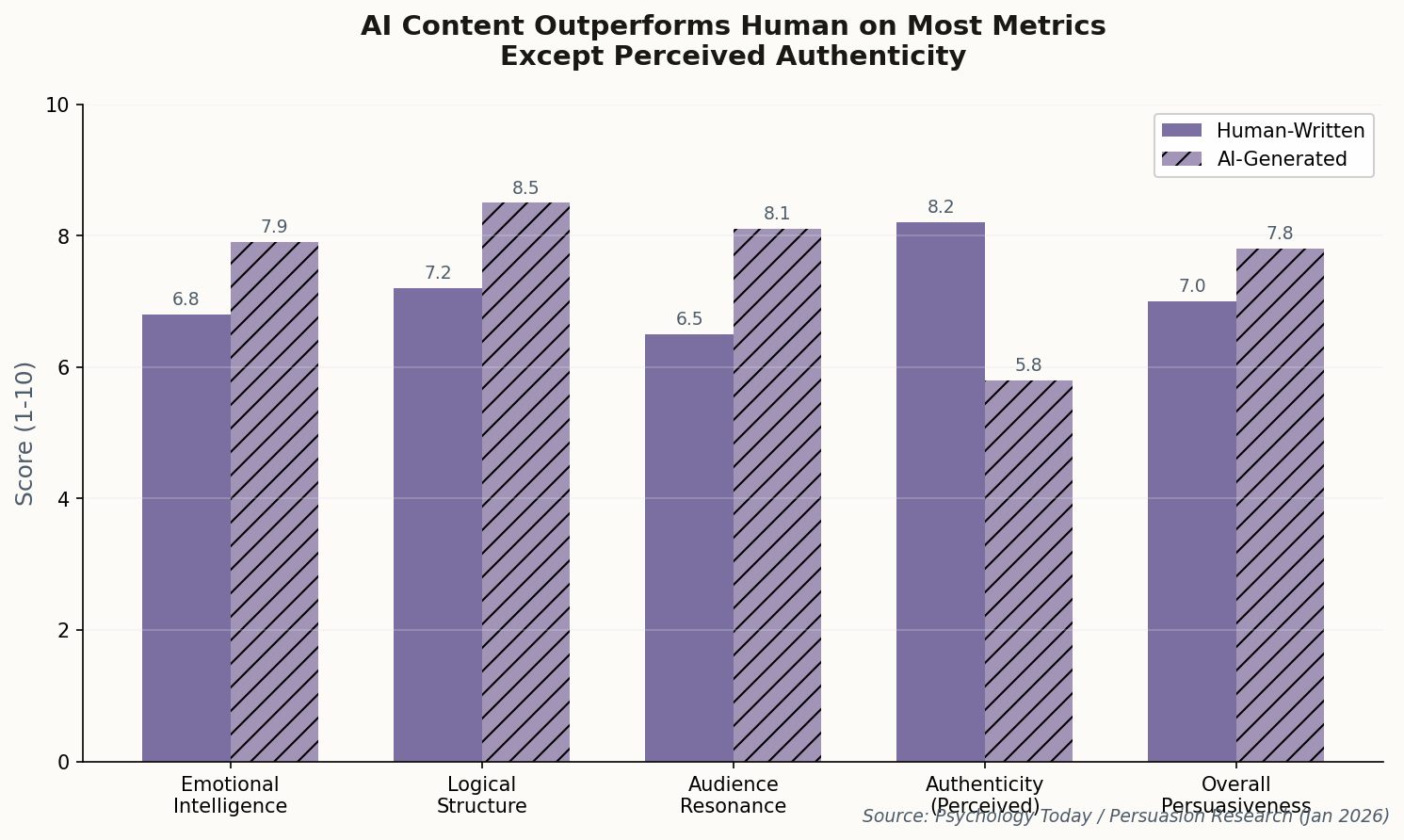

What makes this worth studying: in an era when many keynotes lean on AI teleprompters and real-time sentiment analysis, Carney showed that classical rhetorical structure (clear vocabulary, minimal repetition, subtle allusions) can still outperform the gimmicks. The ancient tools work when wielded by someone who understands their power.