Washington Finally Notices the Lights Flickering

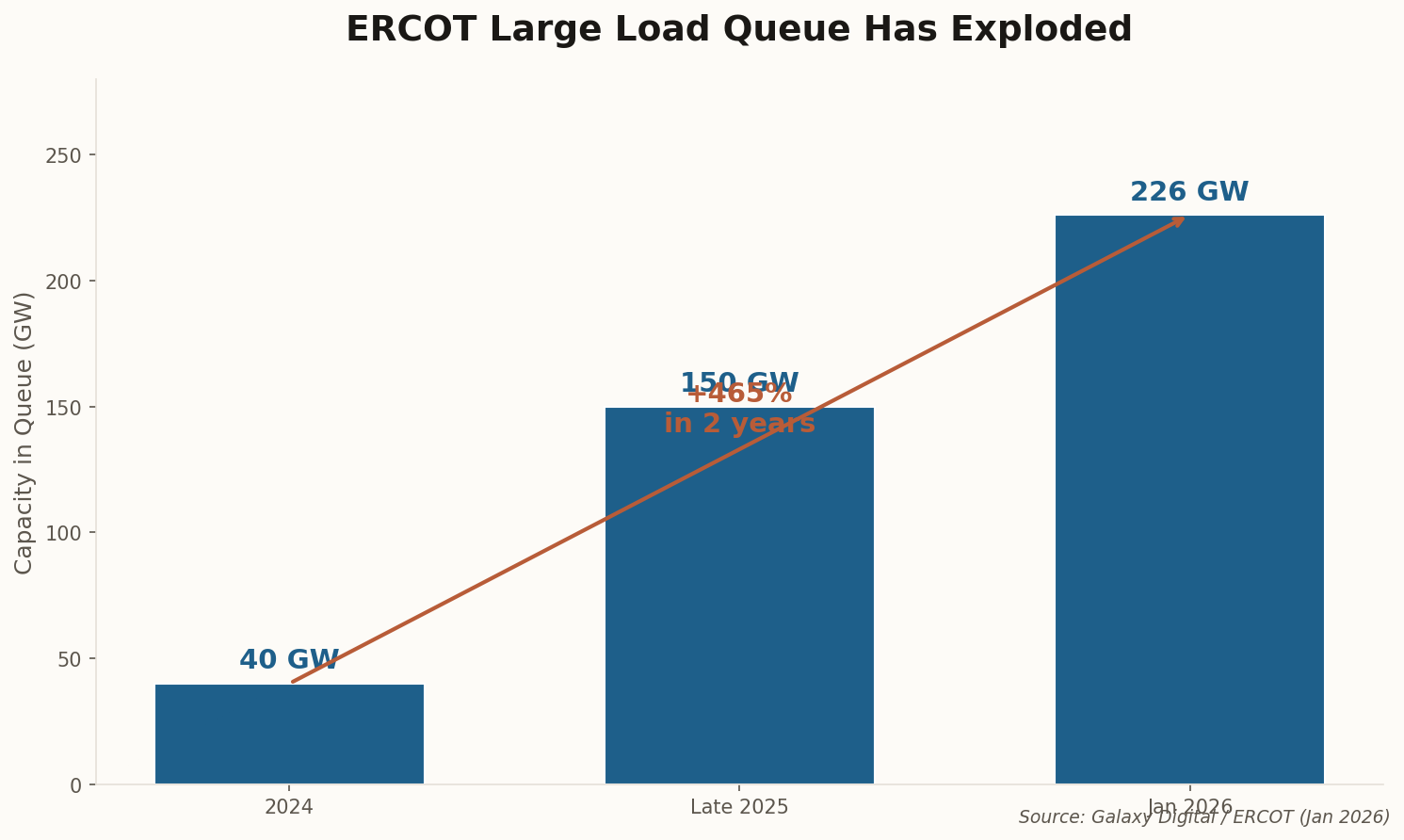

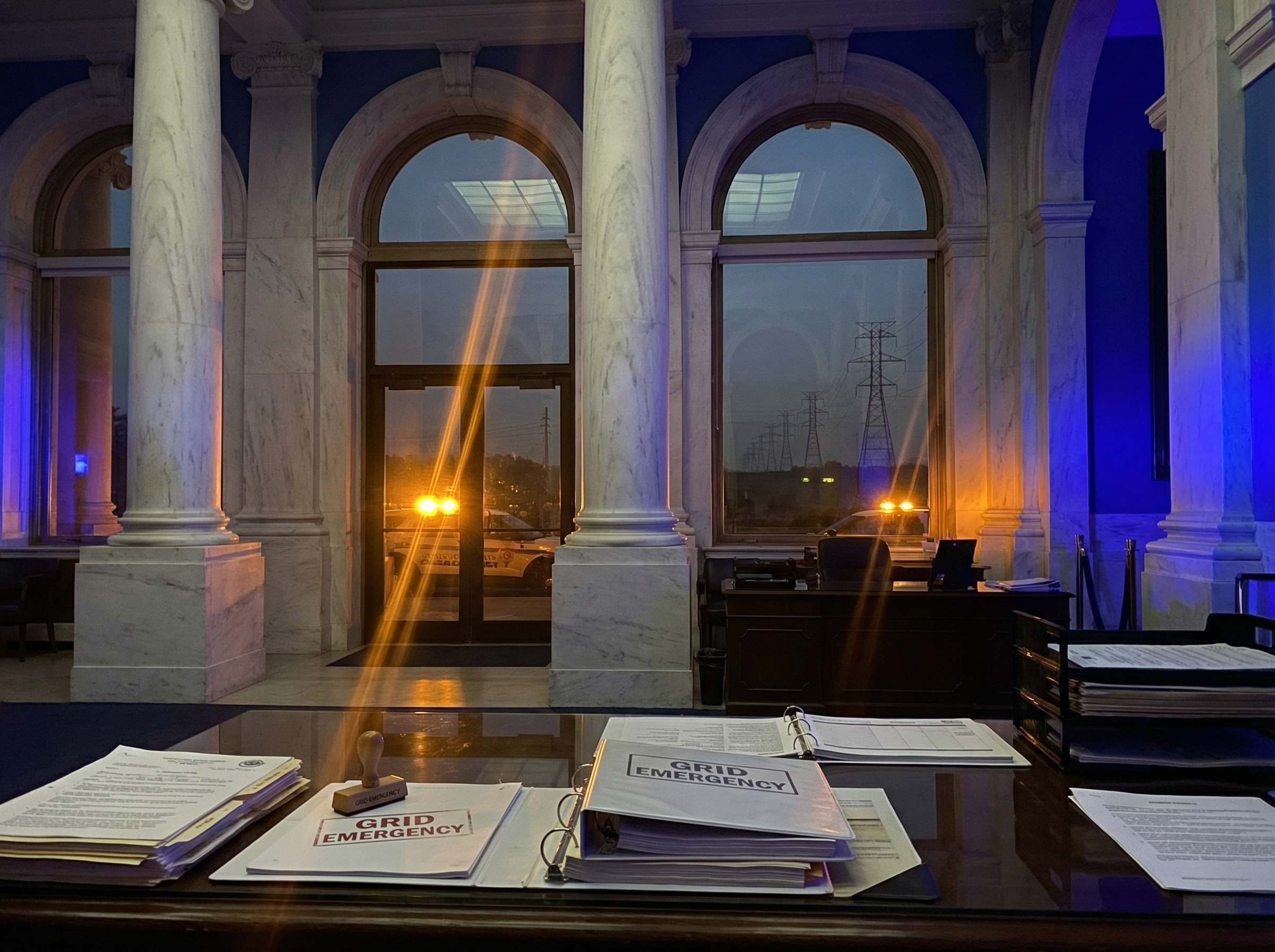

When the White House convenes an emergency meeting with a bipartisan group of governors to discuss something as unglamorous as power grid capacity, you know we've passed a threshold. This week, that meeting happened, and the subtext was clear: the AI boom is straining American infrastructure to the breaking point.

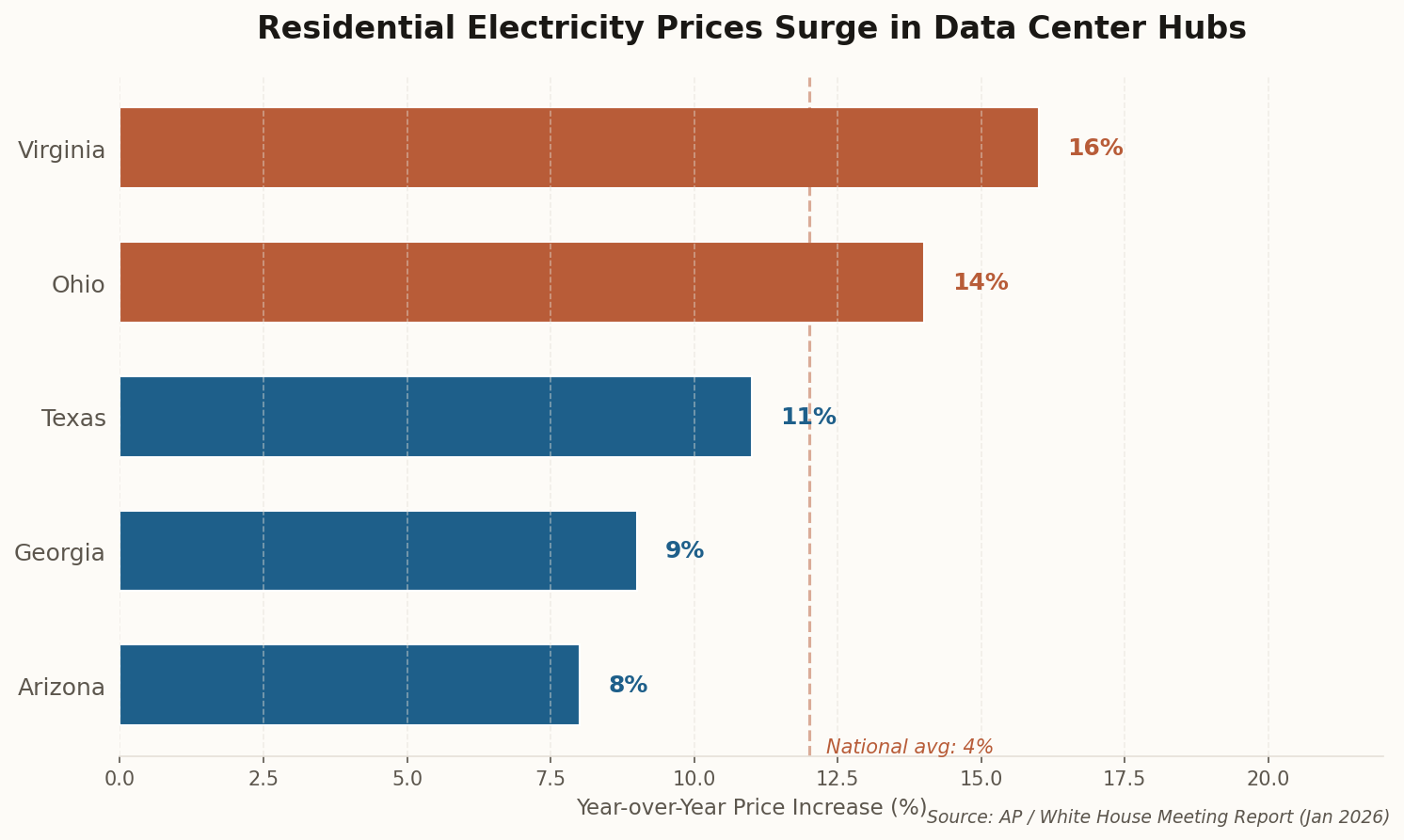

The immediate trigger? PJM Interconnection, the grid operator serving 65 million people across the mid-Atlantic and Midwest, faces capacity constraints so severe that officials are now urging emergency power auctions to incentivize new plant construction. The governors in attendance lead states where residents have seen electricity bills surge 12-16% in a single year, increases directly attributable to ratepayers subsidizing the cost of serving data center load.

This marks a fundamental shift. For years, states competed aggressively for data center investment with tax breaks and cheap land. Now the calculus is inverting: those data centers demand so much power that the surrounding infrastructure cannot keep pace, and ratepayers are funding the gap. The federal government's involvement signals that this is no longer a regional nuisance; it is becoming a national economic and security concern.