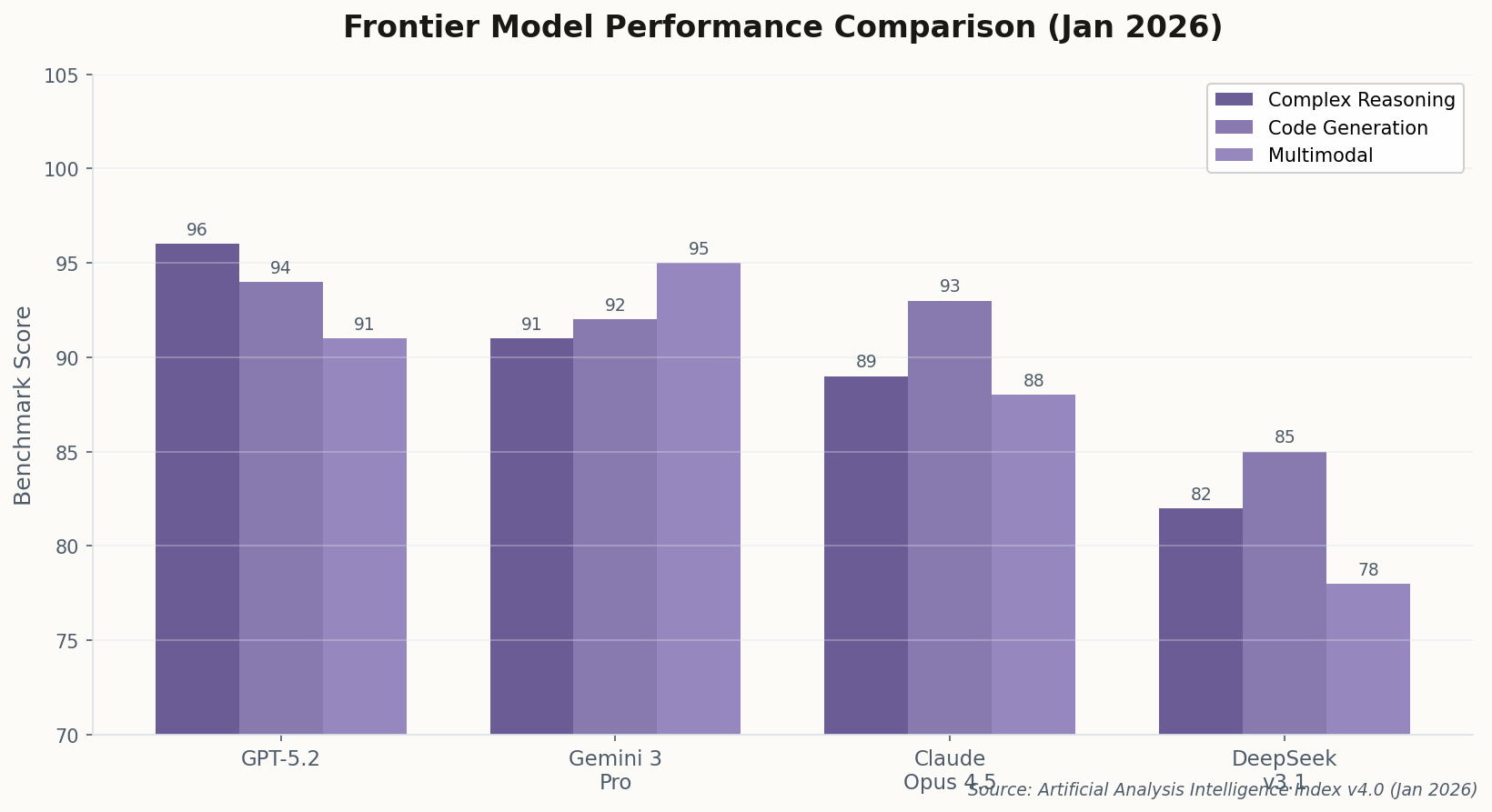

Gemini 3 Goes Enterprise, Gmail Becomes Your AI Assistant

Google's Gemini 3 Pro is now available to enterprise customers and integrated directly into Gmail. The pitch: "proactive assistance" that drafts responses and surfaces relevant context before you ask, alongside conversational search.

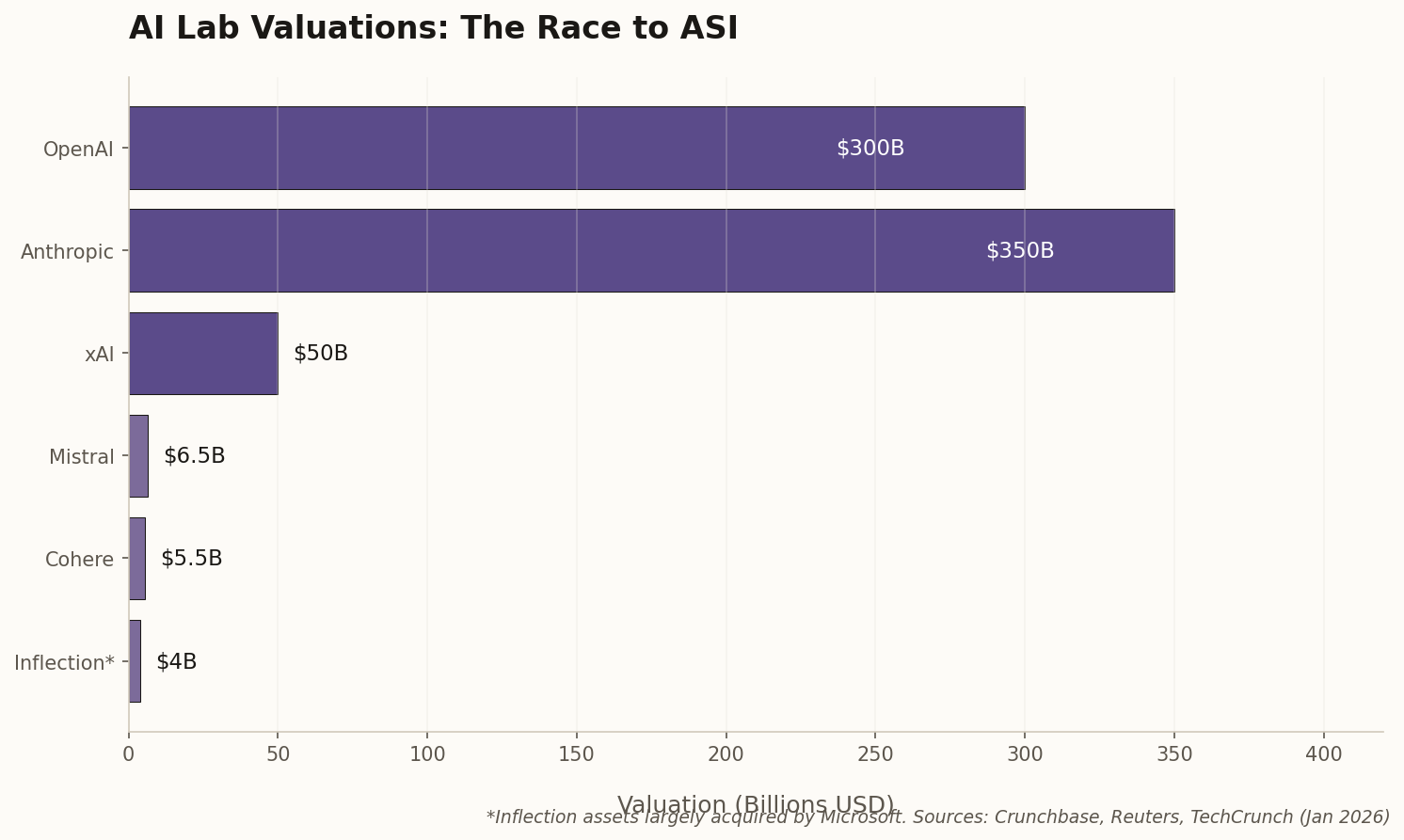

This is Google playing its distribution card. While OpenAI builds standalone products and Anthropic chases enterprise deals, Google is embedding advanced LLMs into the workflows of 3 billion Workspace users. The strategy is clear: don't ask people to change their habits; meet them where they already are.

The "proactive" framing is telling. We're moving from "chatbots you invoke" to "assistants that anticipate." Whether users find this helpful or creepy will determine whether Google's integration play succeeds or triggers a privacy backlash.